Does Tesla's software have a pedestrian problem? Part 2

A look at vague claims by Taylor Ogan

This is a continuation of the piece I published yesterday. For context on what I’m covering here, I suggest reading at least the beginning of that if you haven’t already. Most of that article is dedicated to assessing the claims made by Dan O’Dowd about Tesla’s FSD Beta.

In this continuation, I will focus on the claims made by Taylor Ogan, which are far less specific. Taylor is another Twitter personality who is relatively well known within the Tesla community, and he has been a vocal critic of Tesla, especially over the past year or so.

Claim 2: “Teslas still aren’t stopping for children”

Taylor’s claim was initially challenging to pin down, since he did not at first make any specific claims or share any details of the purported “test”. Instead, he simply tweeted this, which contains a video of a Tesla crashing into a mannequin similar to the one we saw Dan O’Dowd use in part 1:

Looks and sounds bad, right?

At the time of writing, this tweet has over 30,000 retweets (including quote tweets) and over 200K likes. That’s quite a lot of attention! Many seem to be interpreting this as a test of the Full Self Driving Beta, while others are assuming it is a test of the production Autopilot features. So which is it?

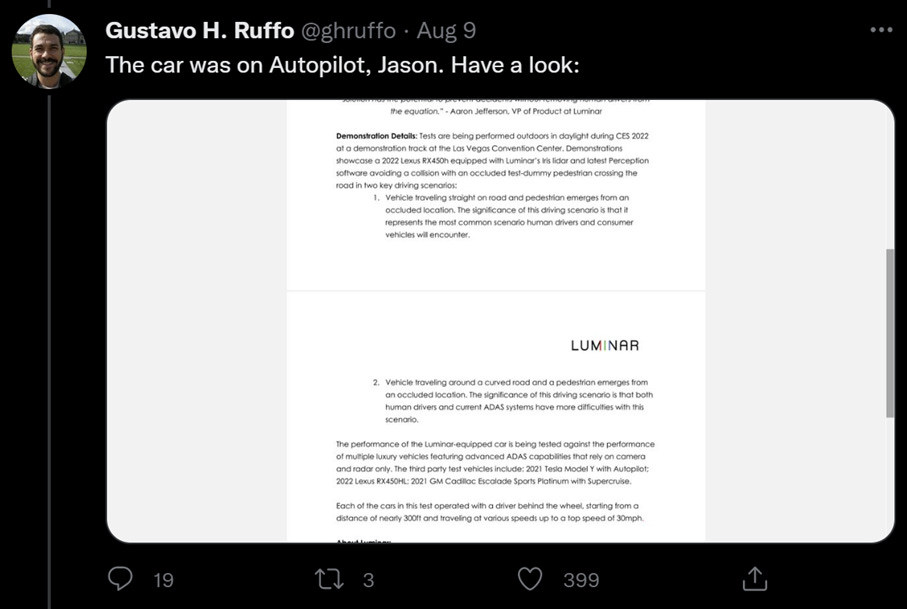

In a follow-up tweet, Gustavo H. Ruffo, a blogger at Auto Evolution, claimed that the test vehicle shown in the video was on Autopilot. He also shared an excerpt of a document produced by Luminar, a LiDAR manufacturer apparently responsible for this “test” at one of its marketing events. However, the document appears to describe different tests from the one depicted in the video, and its only mention of Autopilot is in the description of the Tesla vehicle itself, a “Tesla Model Y with Autopilot”.

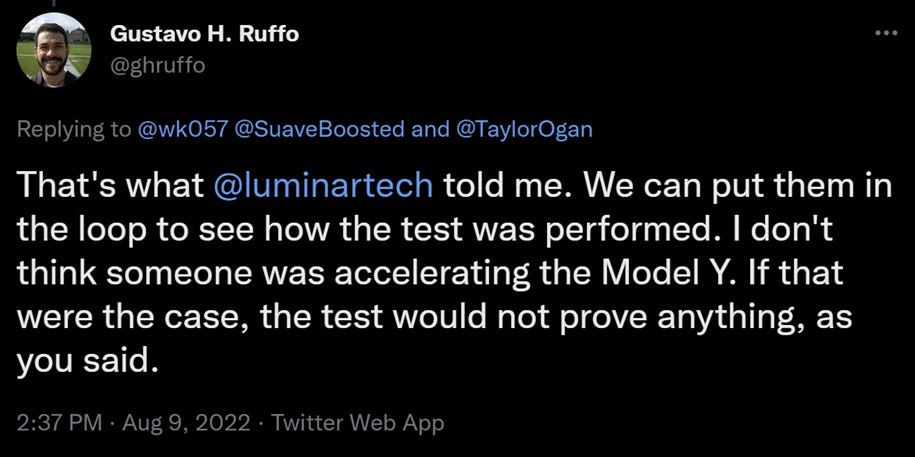

Gustavo followed up with a particularly interesting reply to someone who challenged the idea that Autopilot was in use in the video Taylor had posted:

Keep that last sentence in mind. It will be important later.

In response to someone else asking about the settings used during the test, Taylor tweeted this video which he says he took from inside the test vehicle during this specific test:

In the video, we can see that the vehicle is not using any “FSD” or Autopilot modes. Instead, we see the driver, with hands on the wheel, accelerate aggressively toward the mannequin. In the middle section of Taylor’s video, the audio “mysteriously” cuts out. Taylor initially blamed this on Twitter video compression, which is of course nonsense. I immediately suspected that the audio was removed in order to hide the alarm sounds the car was likely making during the test, in the form of Forward Collision Warnings.

I reached out to Taylor first via Twitter replies and then via DM. He eventually responded to the latter. One thing he clarified is that this test does not involve Autopilot (or “FSD Beta”), and that it is intended as a test of Automatic Emergency Braking. I asked him to reply to Gustavo to clear up his misunderstanding, which he did:

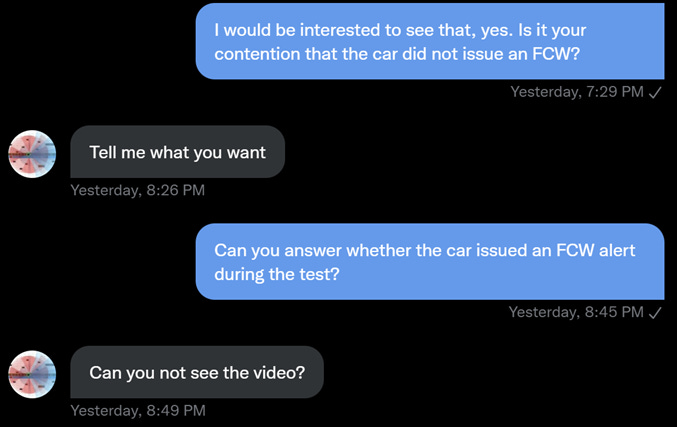

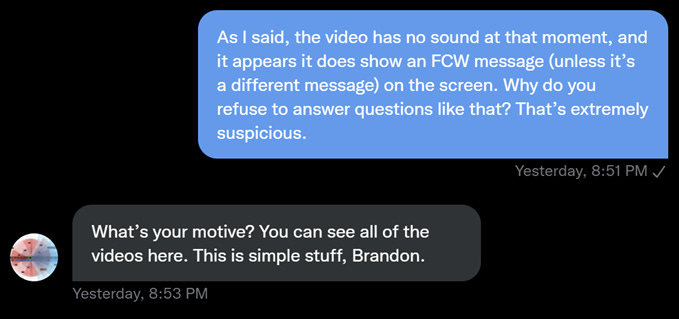

I also asked him about the Forward Collision Warnings, and whether the car issued any during the test. He refused to answer the question, instead deflecting each time:

Later on I pressed him on this point again, and he again deflected – copying and pasting the same response:

It seems clear to me from this exchange that Taylor wants to mislead people into thinking the Tesla ADAS did not see the mannequin or react to it in any way, yet the truth is that it did. He doesn’t want to straight-up lie and say that Forward Collision Warning did not kick in, so instead he hides it (by cutting out the audio in the video he shared) and just refuses to answer the question.

UPDATE: On August 17th, Taylor reached out to me and provided the full video of the run. It does not reveal an audible Forward Collision Warning happening, though it does still show a message appearing on the screen which cannot be made out. I still don’t know why he refused to answer my questions about FCW or why he provided an edited video with missing audio in the first place.

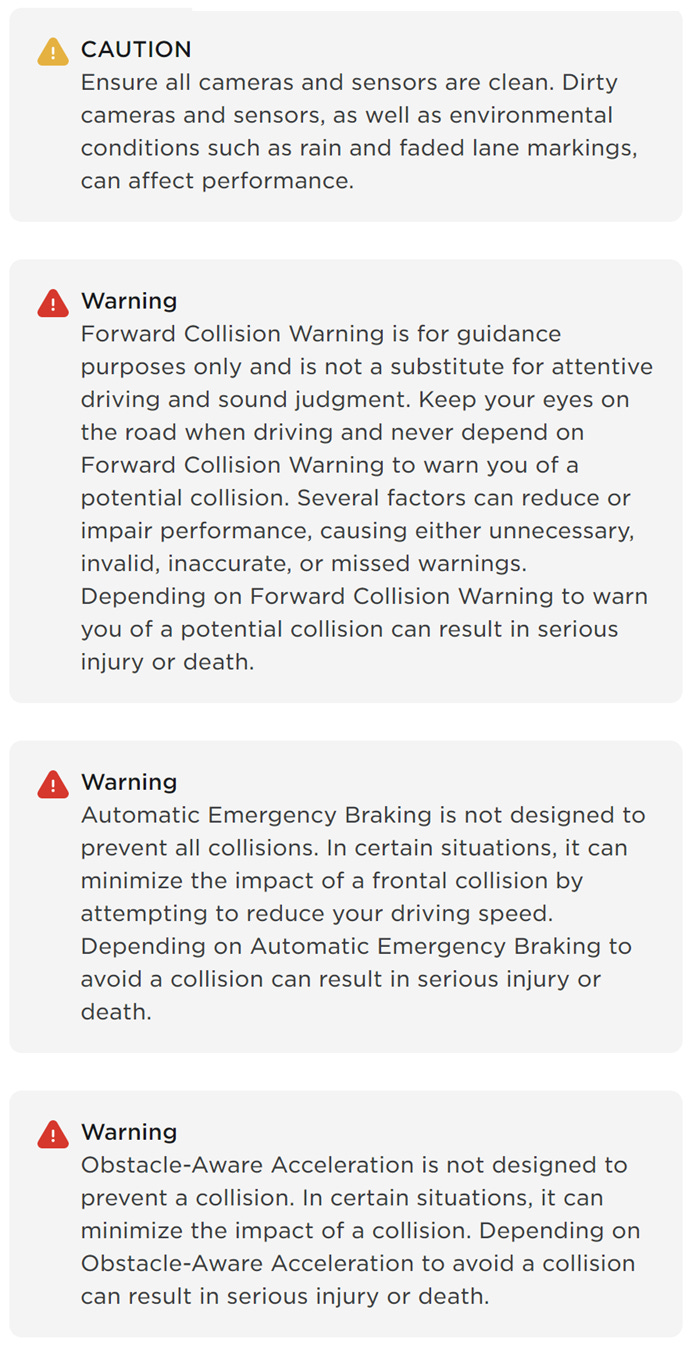

Taylor repeatedly emphasizes that Automatic Emergency Braking did not kick in and stop the car. Is it reasonable to expect it should? To answer that, let’s look at the Tesla Model Y user manual. First, let’s see what it says about the collision avoidance features offered:

We’ve established that Forward Collision Warning seemed to work as expected.

UPDATE: As mentioned above, Taylor later provided evidence that FCW did not in fact fire. However, a message (possibly an error with the active safety systems) was displayed on the screen, and the other questions and concerns covered in this article are still valid.

But what about Automatic Emergency Braking? It seems clear from the manual that it is not designed to prevent all collisions. But shouldn’t it have kicked in and slowed or stopped the car in this case?

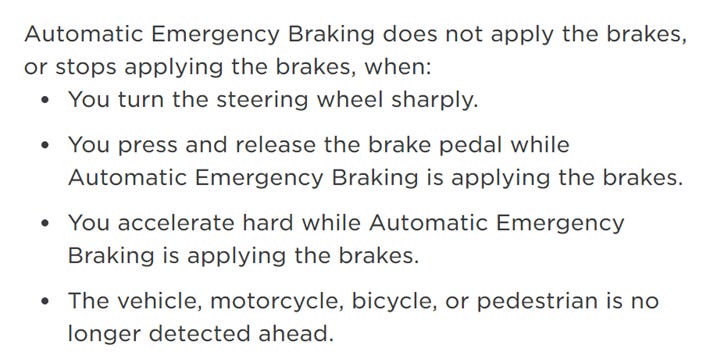

Let’s see what else the manual has to say about AEB in particular:

Based on the video, it seems very likely that at least the third bullet here is applicable. This makes sense, as Automatic Emergency Braking is designed to help avoid or reduce the severity of accidents. It is not designed to prevent the driver from intentionally driving into something, as was the case here. When a Forward Collision Warning is issued and the driver (whose attention is ahead and whose hands are on the wheel) responds by accelerating, the system today defers to the driver, assuming they know better. This is how every ADAS I’m aware of works, including the standard system in the Lexus vehicle also shown in the video.

In fact, Lexus has very similar language in this vehicle’s owner’s manual:

So why did this Lexus stop? Gustavo provided a likely explanation in the same Twitter thread. The Lexus was modified with a non-standard emergency braking system by Luminar, the company running this demo of its LiDAR sensor technology. Since this was a custom AEB solution, they likely configured it to stop no matter what the driver did (this is assuming that both drivers indeed performed the same actions, of course).
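To make that difference concrete, here is a minimal Python sketch of the kind of driver-override logic I am describing. It is purely illustrative: the names `Action`, `DriverInput`, `decide`, and the `driver_can_override` flag are my own hypothetical inventions, and this is not how Tesla, Lexus, or Luminar actually implement anything. It only models the idea that a stock system defers to a driver who is pressing the accelerator, while a custom system could be configured to brake regardless.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"                      # nothing detected, do nothing
    WARN_ONLY = "warn_only"            # warn, but leave control with the driver
    WARN_AND_BRAKE = "warn_and_brake"  # warn and apply the brakes


@dataclass
class DriverInput:
    # Hypothetical signal: the driver is pressing the accelerator hard.
    accelerator_pressed_hard: bool


def decide(collision_imminent: bool,
           driver: DriverInput,
           driver_can_override: bool = True) -> Action:
    """Toy decision rule (an assumption, not any manufacturer's real logic)."""
    if not collision_imminent:
        return Action.NONE
    # A system that defers to the driver treats hard acceleration as intent.
    if driver_can_override and driver.accelerator_pressed_hard:
        return Action.WARN_ONLY
    # Otherwise, or if overrides are disabled, brake.
    return Action.WARN_AND_BRAKE


# Stock-style behavior: driver floors it, system defers.
print(decide(True, DriverInput(accelerator_pressed_hard=True)))
# Custom demo-style behavior: overrides disabled, system brakes anyway.
print(decide(True, DriverInput(accelerator_pressed_hard=True),
             driver_can_override=False))
```

With identical driver inputs, the two configurations produce different outcomes, which is why a side-by-side video of the two cars says very little about the underlying sensors.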

As Gustavo correctly indicated in his earlier reply, the fact that this vehicle is being driven by a driver intentionally aiming at and accelerating toward the mannequin means that this “test” doesn’t prove anything. Or certainly not anything useful.

In my judgement, Taylor Ogan’s tweets about this “test” seem intended to deceive. He avoids making explicit claims, which perhaps helps him feel better about himself, but it’s clear now that he intentionally leads readers to draw certain incorrect conclusions, and then fails to refute them or to even answer questions asking him to clarify either his intent or key details of the purported test.

About as dishonest as was possible.

Ogan is apparently happy to waste all of his credibility at once.