Does Tesla's software have a pedestrian problem? Part 1

Recent claims have received a lot of attention - but do they stand up to scrutiny?

This week, videos of two purported tests of Tesla’s Advanced Driver Assistance Systems (ADAS) have been making the rounds, with the main commonality being that both seem to show Tesla vehicles hitting mannequins meant to look like small children. If Twitter is any indication, then these are both getting a lot of attention, and creating the impression that Tesla has a dangerous problem of some kind.

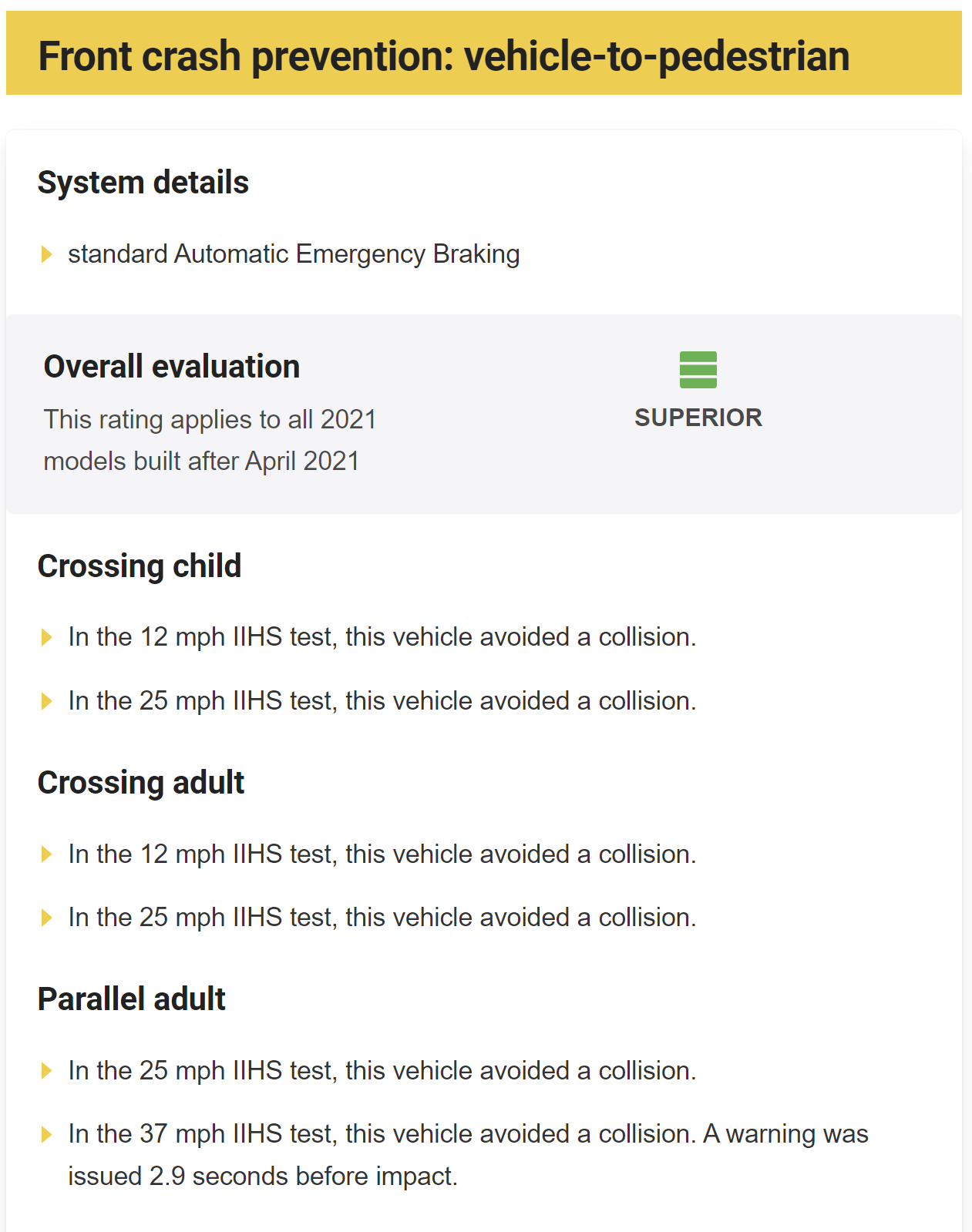

This is surprising to many, because independent tests by government agencies, industry groups, and journalists at reputable publications (e.g., Consumer Reports) have all reported that Tesla’s production software excels at handling exactly this kind of scenario. For example, the Insurance Institute for Highway Safety (IIHS) gives all tested Tesla vehicles its highest rating of “superior” for their active safety systems in the vehicle-to-pedestrian test category, which includes “crossing child” tests.

Do these newly publicized tests undermine those established results? Let’s take an objective look at the evidence, as well as the sources of these claims, and find out.

I’ll start with a claim made by Dan O’Dowd, which I’ll cover in this article. This became quite long, so I decided to split my analysis of the second claim, made by Taylor Ogan, into a separate “part 2” article.

To assess Dan and Taylor’s claims, it is important to understand what each of them is asserting. While the videos may look similar at first glance, it turns out they’re entirely different claims about entirely different things.

To set a bit of context, let’s quickly review the main buckets of relevant ADAS functionality offered by Tesla.

Active Safety Features - These are powered by the Autopilot system, but operate in the background while a human manually operates the car. They’re designed as a safety net, to help avoid or reduce the severity of accidents due to human error. This is what the IIHS was testing in the results shown above.

“Production” Autopilot Convenience Features - These include “basic” features like Traffic Aware Cruise Control and Autosteer, and more advanced features that are part of the Enhanced Autopilot package (or the all-inclusive “FSD Capability” package). These features are intended for use on highways, and require an attentive driver.

FSD Beta - This refers to a special beta program, and the software being tested in it. The program is currently testing a “City Streets” mode, which is capable of stopping at traffic lights and stop signs, taking turns at intersections, and generally performing most of the tasks needed to drive around a non-highway environment. However, the functionality being tested here is unequivocally classified as “Level 2” partial automation under the SAE J3016 standard. That means just like the regular Autopilot stuff, it requires an attentive driver.

I’m working on a more detailed Autopilot / FSD write-up covering the facts and fiction (including the good, bad, and the ugly) of these offerings, but hopefully this view helps to frame the discussion of the claims made by these individuals.

Claim #1: Tesla’s FSD Beta fails to detect and react to children in plain view in front of the vehicle

This claim comes from vocal anti-Tesla personality, CEO of Green Hills Software, and would-be political candidate Dan O’Dowd. Lest you think I’m being unfair in characterizing O’Dowd as an anti-Tesla personality, I would direct you to his Twitter bio, where he defines his identity around this characteristic:

I’d also point you to some examples of the material he posts on his account and websites:

Dan is not exactly an independent, unbiased researcher. Nor does he have experience or expertise in vehicle safety testing - or in driver assistance or automation systems, for that matter. His company does sell software to some manufacturers (notably, not Tesla) which they then use to develop competing systems.

I don’t intend this as an argument ad hominem. If Dan has evidence of a problem, we should take it seriously, regardless of his background or motivations. However, it is also important to consider the trustworthiness and relevant expertise of a source, especially when they ask you to take them at their word. So with that out of the way, let’s look at the claim and the evidence.

The specific claim made on his “Dawn Project” website is this:

Our safety test of the capability of Tesla’s Full Self-Driving technology to avoid a stationary mannequin of a small child has conclusively demonstrated that Tesla’s Full Self-Driving software does not avoid the child or even slow down when a child-sized mannequin is in plain view.

Our Tesla Model 3, equipped with the latest version of Full Self-Driving Beta software (10.12.2) repeatedly struck the mannequin in a manner that would be fatal to an actual child. The software is a demonstrable danger to human life and must be removed from the market immediately.

O’Dowd commissioned a TV commercial, viewable at the web page linked above, which makes this claim in an especially sensational fashion, and urges viewers to call their congressional representatives demanding that Tesla’s “FSD Beta” be banned from US roads.

Accompanying this video and web page, O’Dowd has published four additional artifacts:

A “raw footage” video, apparently showing an edited montage of additional clips from outside and inside the vehicle being tested

A “signed affidavit” from an observer, apparently in the passenger seat during the tests (and presumably the one recording the shaky in-car video)

Let’s review these to see what we can ascertain about the validity of O’Dowd’s claims.

First, let’s start from the premise itself. O’Dowd suggests that if Tesla’s “FSD Beta” software can crash into a child-like mannequin in the middle of a roadway, it is a “danger to human life and must be removed from the market immediately”. However, remember what we established at the start: despite the potentially misleading “Full Self-Driving” name, the FSD Beta is not an autonomous driving system. It is an SAE Level 2 driver assistance system. A human driver is required and must pay attention, plus keep their hands on the wheel, ready to intervene at any time.

Tesla has a fairly aggressive Driver Monitoring System in place which combines steering wheel torque detection and an in-cabin camera to determine if drivers are not paying attention. For example, if the camera sees you holding a phone, looking away from the road, or interacting with the car’s touch screen for more than a brief instant, it will make a loud sound and display a “please pay attention” message. If the driver doesn’t immediately heed this alert, they are given a “strike” which locks them out of the software until the next drive, and repeated strikes result in automatic expulsion from the beta testing program. I’ve never received a strike, but others who have received them (or been expelled) have posted images of messages like this:

Tesla makes no promise or guarantee that the FSD Beta will avoid all obstacles, including pedestrians. In fact, part of the agreement that all beta testers must accept says that the beta software “may do the wrong thing at the worst time”. It implores them to be vigilant and to not let the car make mistakes. One of the several messages shown to beta testers before they can use the beta “FSD” mode is shown here:

Now, there is a very real and legitimate concern about whether all drivers enrolled in the program are up to this task. O’Dowd doesn’t seem interested in this concern, though. For Tesla’s part, their mitigation for this concern (beyond the Driver Monitoring System mentioned above) is to require that any beta testers explicitly request access to the program, accept strict terms of use, then demonstrate both a high degree of driving safety and an ability to comply with instructions through their “Safety Score” program before they will be invited to join. Initially, only drivers with a perfect 100 out of 100 score were admitted to the program, followed soon after by those with a 99 out of 100 (which is the score I had maintained when I was added). Over the past year or so, they have reduced the minimum score to 90 out of 100, and I get nervous every time they do so. More on that in a later post.

In this way alone, O’Dowd’s claims do not hold much water. The fact that the beta can make mistakes if not managed by an attentive driver is not news, nor surprising, nor itself a problem. At the very least, this is the case for all L2 driver assistance systems, and singling out Tesla’s “FSD Beta” in this regard is unjustified.

In an upcoming post I’ll discuss more about the challenges facing regulators and industry groups like the SAE with highly advanced level 2 functionality, and how to ensure drivers understand and operate such systems safely. There are important, complex, and nuanced conversations to be had about this. It’s a new frontier, and balancing various trade-offs involved is not a simple matter - it’s a situation where reasonable people may disagree.

It is also perfectly reasonable to have concerns about the notion of volunteers operating “beta” vehicle control software on public roads. I don’t think anyone can argue that Tesla isn’t pushing some boundaries here. Unfortunately, O’Dowd doesn’t seem interested in any of those conversations, nor does he seem interested in learning enough about the subject matter to productively contribute to the discourse.

For now, let’s put all that aside and instead focus on O’Dowd’s claims about the software’s behavior. After all, it sure seems like this is a fairly straightforward scenario which the software ought to handle well enough in most cases – right? So how could it fail so badly in all of O’Dowd’s test runs?

Well for starters, we don’t actually know that it did fail all of the tests. O’Dowd does not share how many test runs were conducted. Instead, he only shares video of three runs which appear to fail the test. His signed affidavits (the existence of which is itself… odd) are cleverly worded to avoid making statements about how many tests were conducted, and in particular to avoid saying that only three were conducted. Instead, they simply claim that “three of the tests” resulted in the vehicle striking the mannequin. Given O’Dowd’s history and demonstrated lack of objectivity in this matter, it seems very likely that O’Dowd and his crew performed more than three tests. I would guess many more. He has yet to respond to my inquiries about this.
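To see why the undisclosed number of runs matters, here is a rough sketch. It assumes every run is independent and fails with the same probability, which is a simplification, and the per-run failure rate and run counts are made-up illustrative values, not anything reported by O’Dowd’s team. The function name is hypothetical too. It computes how likely it is to see at least three failures out of a given number of runs.

```python
from math import comb

def prob_at_least_k_failures(n_runs: int, k: int, p_fail: float) -> float:
    """Probability of k or more failures in n_runs independent runs (binomial model)."""
    if k > n_runs:
        return 0.0
    # Sum the binomial probability mass for k, k+1, ..., n_runs failures.
    return sum(
        comb(n_runs, i) * p_fail**i * (1 - p_fail) ** (n_runs - i)
        for i in range(k, n_runs + 1)
    )

if __name__ == "__main__":
    # Hypothetical per-run failure rate and run counts, chosen only for illustration.
    p_fail = 0.10
    for n_runs in (3, 10, 30, 100):
        p = prob_at_least_k_failures(n_runs, 3, p_fail)
        print(f"{n_runs:>3} runs: P(at least 3 failures) = {p:.3f}")
```

Under this toy model, if only three runs were made, all three would have to fail, which at an assumed 10% failure rate happens about one time in a thousand. The more runs are made, the easier it becomes to collect three failures for a video, which is why the total count is the number that matters.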

When looking at his PDF, the first thing that struck me is that the document is quick to describe what it covers as a “scientific test” multiple times. If you’ve read a lot of peer-reviewed papers or professional test reports, you probably know that real scientific research generally doesn’t describe itself this way. Indeed, this is marketing language, not the language of science. This is a theme throughout the document, with misuse of words like “conclusively”, a distinct lack of any kind of hypothesis or baselining, and a general failure to follow anything like the scientific method or industry best practices.

The document claims that the test methodology is designed to simulate “a realistic life-and-death situation in which everyday motorists frequently find themselves: a small child walking across the road in a crosswalk.” However, the methodology used doesn’t simulate this scenario at all, instead simulating a perfectly still child standing in the middle of a cone-lined pathway on a racetrack. There is no attempt here to define a crosswalk (marked or otherwise), so it is confusing that Dan would mention one in the description of what they were attempting to simulate.

In fact, the closest real-world scenario that this might be simulating is a child standing in the middle of a construction zone on a highway (where vehicles are traveling at 40 MPH in the construction zone). While this is of course a possibility (especially if you put aside the part about them standing impossibly still), it certainly isn’t a situation everyday motorists frequently find themselves in. Indeed, it’s an inherently dangerous situation that should be exceptionally rare. It’s also a situation where Tesla explicitly warns drivers to take extreme caution or even to disable the system proactively.

Next, the document explains how the driver accelerated the vehicle to 40 MPH before enabling the “FSD” mode. Since this isn’t on a supported mapped street, and also because no destination is entered (so the car doesn’t actually know where it’s supposed to try and go), this isn’t really a test of the “FSD” mode – at least not as it’s intended to operate. Instead, it’s a sort of reduced functionality mode using the same software, but basically an Adaptive Cruise Control + Lane Keep Assist mode.

The driver’s actions result in setting the cruise control speed to 40 MPH, which the car will try to maintain when it doesn’t perceive a need to slow down. The document says that the driver then proceeds with their hands off the wheel (violating the rules of the beta program) for the remainder of the test. The document also says that the car would “start to stagger as if lost and confused, slow down a little, and then speed back up as it hit and ran over the mannequins” – which, if true, sounds like a reaction to the very weird situation it’s been put in. I say “if true”, because none of this is shown in the provided videos.

So what’s going on here? Let’s look at the video to see if it can shed any light on what was observed.

The video linked from the document shows three test runs – presumably the same three described in the document. Right away it’s apparent that the document does not describe the observations completely or correctly. In each of the three recorded test runs, we are shown a clip from the interior of the vehicle - however, not at the start of the test. Instead, it jumps straight to the end. The interior video from the first test begins with the vehicle approaching the mannequin at 37 MPH.

Note that the car clearly sees the cones as well as some parked cars and a person off on the right. It’s also determined that the cones are meant to establish three lanes of travel (depicted by the lines on the road in the Autopilot visualization). At this moment, the car does not indicate that it sees a person or obstacle in front – however, it’s important to note that the car does not visualize all obstacles that it sees, especially at farther distances.

A couple of seconds later, the car is at 38 MPH, and we see that it does depict the mannequin as a person directly ahead, as seen here (it is hard to make out due to the poor quality of the video O’Dowd shared):

Following this, the car begins decelerating, and immediately triggers a Forward Collision Warning and a “take over immediately” message, with alarms blaring, alerting the driver that something is wrong and it needs help. This is accompanied by a large red steering wheel (with hands on it) displayed on the screen, and it is the most severe alert that Autopilot/FSD gives. The appearance of this message means Autopilot is deactivating and going into its failsafe mode - which stops the vehicle with the hazard lights on.

It’s difficult to see the exact moment of impact, but based on the video it appears the car has slowed itself to approximately 16 MPH at the time it hits the mannequin. This does not match what is written in O’Dowd’s document, but we can see that it is the case by stepping through the video frame by frame.
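For a sense of scale, here is a back-of-the-envelope calculation using the speeds read off the video (38 MPH before braking, roughly 16 MPH at impact). The only physics assumed is that kinetic energy scales with the square of speed. The function name is hypothetical, and the speeds are approximate readings from low-quality footage.

```python
def speed_and_energy_reduction(v_before_mph: float, v_impact_mph: float) -> tuple[float, float]:
    """Return (fractional speed reduction, fractional kinetic-energy reduction)."""
    if v_before_mph <= 0:
        raise ValueError("initial speed must be positive")
    speed_ratio = v_impact_mph / v_before_mph
    # Kinetic energy is proportional to v**2, so the energy ratio is the speed ratio squared.
    return 1 - speed_ratio, 1 - speed_ratio**2

if __name__ == "__main__":
    # Approximate speeds taken from the in-car video of the first run.
    speed_cut, energy_cut = speed_and_energy_reduction(38, 16)
    print(f"Speed reduced by about {speed_cut:.0%}")
    print(f"Kinetic energy reduced by about {energy_cut:.0%}")
```

With these readings the car shed roughly 58% of its speed and about 82% of its kinetic energy before impact, which is hard to square with a claim that it did not “even slow down”.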

After hitting the mannequin, the video shows the car bringing itself to a stop with the hazard lights enabled:

So why is none of this mentioned in O’Dowd’s document, web page, or advertisement? Perhaps because this doesn’t match the story he wants to tell.

In the second video, the view of the screen is out of focus, making it difficult to read the speed and certain other details. However, this clip shows more of the lower part of the screen, which lets us see that O’Dowd has been hiding something: an error or warning message the car is displaying to the driver:

If you aren’t familiar with Tesla’s user interface, you might not notice. However, the dark rounded rectangle in the lower left of the above image is the message I am referring to. Another thing we can see here is that either the FSD software or the driver has initiated the right turn signal, and the car is planning to move over to what it perceives as the right lane when it finds a break in the cones.

The blurriness makes it hard to see, but it does appear that the display depicts the mannequin in the vehicle’s path again in this test run:

This time, there’s no “take over immediately” alarm and the car does not put on the hazards and stop. Instead, it seems to slow down and try to go around the obstacle, though it appears it fails to do so. Meanwhile, the error or warning message is constantly on the screen. This could be indicating a malfunction, an obstructed camera view, or that “Full Self Driving capability is degraded, use additional caution”. Another possible message that appears in this way is “Accelerator pedal pressed - Cruise control will not brake”. Presumably that one isn’t relevant here, since O’Dowd promises the driver did not touch the pedals. Unfortunately, O’Dowd doesn’t seem interested in telling us what the message said, despite inquiries via Twitter.

Once again, what’s shown in the video does not match the description in the document. The vehicle doesn’t speed up after striking the mannequin, and instead seems to come to a near stop. It almost looks like the video has been slowed down at this point which makes it harder to gauge the vehicle speed very precisely, but O’Dowd claims the video is “raw footage” and “unedited” (of course it obviously is edited at least enough to put together the various camera angles and leave off the parts we don’t see). Without a clearer view of the speedometer, it’s hard to say exactly how fast it was going during and after the impact in this run.

The third test run shown goes much the same as the second. The car again seems to want to go around the obstacle by steering to the right, but the cones prevent it from doing so successfully. And again, the unexplained error/warning message is displayed on the screen the entire time.

So to recap:

In the first test shown in the video, the car detected the mannequin as a person and panicked, slowing to a stop and alerting the driver it needs help

In the second and third tests shown in the video, the car seems to be constantly warning the driver about a malfunction or other issue, which the driver is ignoring

We have no idea how many tests were conducted, nor how many parameters had to be altered before these three results (clearly desired by the practitioners) were achieved. This is not how you do science

O’Dowd claimed on his website and in his advertisement that “[the] software does not avoid the child or even slow down when a child-sized mannequin is in plain view”, and yet the video shows the vehicle did slow down in all three tests (the PDF document also states this, though it disagrees with the video about the speeds)

None of these tests involves anything the car can detect as an intersection or crosswalk, despite the claimed objective of the test

Because this is not a mapped road, the software is operating outside of its intended operational design domain (mapped city streets), and thus its behavior is not well-defined

Because no destination is set, the car is not operating in the true “FSD” mode, and behavior in this configuration is known by testers to be less than ideal

The use of cones to outline the “lane” boundaries, combined with the 40 MPH test speed, simulates a highway construction zone - an exceptionally rare situation for a child to be present in the road, and a situation where Tesla explicitly tells drivers to be extremely cautious or to not operate the software at all

In all tests, the mannequin is placed in the exact center of the vehicle’s path, with cones on either side, leaving insufficient room to go around it

Unlike in most professional tests (e.g., by the IIHS), the mannequin is completely stationary. This is also of course unlike any real-life human child who could never be this still. This very likely contributes to the software being uncertain about what is in front of it. Since it is not a person, it’s arguably correct for it to be uncertain about what it is, which makes it a less effective test of a real-world scenario

I don’t want to accuse Dan of cheating without evidence, but given the several misrepresentations identified above, this is also a possibility. For example, we do not know if the vehicle was modified or deliberately handicapped – e.g., by placing an obstruction over the camera housing in a manner which obscures a very particular portion of its field of view

One other possibility, which I alluded to earlier and which would amount to truly overt cheating (and mean the driver lied in their affidavit), is that the warning message displayed on the screen is actually the one shown when the accelerator is pressed while in cruise control / Autopilot / “FSD”… the message which says “Accelerator pedal pressed - cruise control will not brake”. I hesitated to even mention this, but given all the other indications of dishonesty here, we cannot rule it out

Despite all of that, would it be good for Tesla to investigate this scenario to try and reproduce these results? Of course. Are Dan’s claims an accurate reflection of the current “FSD Beta” functionality in a real-world situation? Not at all.

It is true that the “FSD Beta” is a work in progress and requires a vigilant driver to oversee it, and it can and does make mistakes sometimes. Beta testers also need to understand its quirks and limitations, and they must commit to operating it safely. Industry experts, regulators, and standards bodies also have a lot to figure out about the whole situation with ADAS systems, especially advanced L2 offerings and “beta” programs on public roads.

However, it seems clear to me that O’Dowd set out to make a particular advertisement, and then took whatever steps were needed to produce it - even if it meant contriving his evidence, cherry-picking or misrepresenting results, and generally deceiving viewers.

In the next article, I’ll look at a claim made by Taylor Ogan which on the surface seems similar, but turns out to be about entirely different software in an entirely different situation, and which in some ways may even rival O’Dowd’s claim in its dishonesty. That article is now available here.

Update / Addendum:

Something kept bugging me after I posted this: why have the cones? After all, without them this section of racetrack is just like a residential road with no cars parked along the sides. Why not just point the car at the mannequin and see what it does? I’ve come to strongly suspect that they tried this, and discovered that the car kept avoiding the mannequin, probably by slowing and then going around it. They may have tried a variety of positions, and still failed to achieve their desired result.

So they kept trying, and eventually discovered that cones make Autopilot nervous, and restrict its movements substantially. It always tries to get away from them. Indeed, even many non-beta owners are likely familiar with the “changing lanes away from cones” message. In the current beta build, this aversion to cones is overly strong - to the point that Tesla says this is improved in the next beta version.

With a line of cones on both sides, it can’t get away from them by changing lanes, so it usually gets very aggressive about asking the driver for help. I’ve seen this at construction zones, and I always take over in those situations because of this. I suspect O’Dowd and crew experimented until they discovered this weakness, and then ran their test in a situation where any responsible driver would be heeding the system’s pleas for help and taking over.

I have also been wondering why they chose 40 MPH, as that’s quite a high speed for such a test. Perhaps they started with a slower cruise speed, and again couldn’t get the result they wanted. If O’Dowd wants to come clean about this whole thing, he could start by telling us how many tests they conducted and how the system performed in the others.