A Brief History of Tesla's "Full Self Driving"

A look back at the FSD promise and reality, from 2014 to March 2023

Among a variety of controversies (some justified, some not) surrounding Tesla and its CEO, one of the most recognizable is the matter of the company’s “Full Self-Driving” offering. Depending on whom you listen to, you may have heard that it is the best thing since sliced bread and the future of the automotive industry, a useful feature every driver should have, a gimmick, vaporware, questionable, irresponsible, or a deadly menace. If you ask me, it’s a little bit of several of those. In short: it’s complicated.

I’ve been wanting to write a piece about what I think Tesla should do to improve the situation – aiming to strike a balance between doing right by customers and doing the best thing for Tesla’s long-term business interests. However, I thought it would be useful to first take a look back at how we got where we are today. My look ahead will follow in the coming days.

Even if you are already familiar with this topic, I hope this article will provide a helpful refresher to contextualize the suggestions I’ll be making in the next piece. I also intend to use this as a reference I can point people to if they want to learn more.

2014

Let’s hop in our DeLorean and travel back to a far-off era when the “Ice Bucket Challenge” had recently gone viral, and there were still only six live-action Star Wars films.

Tesla was already around ten years old at this point, having been founded in 2003 – initially by Martin Eberhard and Marc Tarpenning, though the duo were soon joined by Ian Wright, Elon Musk, and J.B. Straubel before the company began designing cars or even hiring employees. For a good overview of the company’s overall history, I recommend checking out the History of Tesla page on Wikipedia.

Despite having been around for a decade, Tesla in 2014 was still a very early-stage, upstart automaker. They’d made a small splash with their first product, the Tesla Roadster, and were making a bigger one with their first completely original design, the Model S (introduced in 2012). The company’s journey was anything but smooth sailing – yet they pursued their mission relentlessly, managing to beat seemingly impossible odds at almost every turn.

In October 2014, at an event introducing the new dual-motor “D” variant of the Model S, Elon Musk surprised attendees by announcing the introduction of the “Autopilot” suite - an Advanced Driver Assistance System (ADAS) which would provide new active safety and convenience features. At the time, Musk said that there were “not enough safety redundancies in place” yet to enable autonomous functionality, but he began painting autonomy as Tesla’s ultimate ambition.

The actual features introduced in 2014 are pretty boring by today’s standards. Lane Departure Warning and a speed limit warning (to optionally indicate visually or audibly if you were going faster than the speed limit) were the first “Autopilot” capabilities to be introduced. Of course, more functionality was promised to be on the way. At the time, Tesla’s official statement said:

Our goal with the introduction of this new hardware and software is not to enable driverless cars, which are still years away from becoming a reality. Our system is called Autopilot because it's similar to systems that pilots use to increase comfort and safety when conditions are clear. Tesla's Autopilot is a way to relieve drivers of the most boring and potentially dangerous aspects of road travel – but the driver is still responsible for, and ultimately in control of, the car.

(source)

2015

Features you more likely associate with the “Autopilot” name, like Traffic Aware Cruise Control (“TACC”) and Autosteer, arrived via a software update in 2015. TACC is Tesla’s name for their adaptive cruise control mode, intended to ease highway and stop-and-go driving by adjusting the car’s speed to keep a consistent, safe distance from the car ahead of you. Autosteer is Tesla’s name for their SAE “Level 2” partial automation mode, combining TACC with automated steering to follow the road and stay centered in its lane. This is what people most often refer to as “Autopilot”, even though the official name, including how it is labeled in the car, is Autosteer.

This initial version wasn’t really a Tesla-developed technology, though they were responsible for how it was expressed in terms of specific features and user interface elements. The underlying functionality was provided by hardware and software from MobilEye – one of the major ADAS suppliers then and now, which was later acquired by Intel. The system relied upon a single forward-facing camera, a Bosch mid-range forward-facing radar, and a set of 12 short-range ultrasonic sensors (USS) around the vehicle.

2016-2017

In 2016, Tesla ditched MobilEye (or was ditched by them, depending on whom you ask) in favor of a homegrown solution which would become known as Autopilot 2.0. This came with new sensors (a new radar, 8 exterior cameras, plus ultrasonic sensors) as well as new compute hardware (using a customized Nvidia solution) and custom Tesla-developed software. Initially, AP 2.0 had fewer features than AP 1.0, and years later a small but persistent group of customers would still swear that the 1.0 system was in fact better in particular ways.

It was around the time AP 2.0 was introduced that Musk began talking about “Full Self Driving”. He claimed that this new suite of hardware would be sufficient to enable autonomous driving eventually. What’s more, FSD would soon become available for pre-order – an optional purchase which would yield zero immediately available functionality, only the promise of it to come later.

The “Paint It Black” video

It was at this time that Musk and Tesla released the infamous “Paint It Black” video, purporting to show a Tesla vehicle driving on its own along a typical route including both highway and non-highway roads. As with so many things here, interpretations of how this video was produced and presented vary. Many of us who’ve followed this industry for a while understood this as a proof-of-concept, not something that was nearing release. From what we know, the vehicle was indeed operating using the Autopilot system, and the video was not “faked” as some have claimed. However, it did require several takes to successfully complete the route as shown in the video, and the software powering it was heavily tailored to making this specific route work in relatively controlled circumstances.

I call what was shown in that video “demoware”, and this kind of thing isn’t particularly uncommon when it comes to early tech announcements. Even in the automotive industry, companies like MobilEye and Nvidia have released several videos which aren’t all that different from this. For example, this Nvidia video was released the same year. It appears to show an autonomous vehicle going from unusable in February 2016 to having a “driver” playing on his phone after just one month and “3,000 miles of learning”. At that rate of improvement, surely we’d all be driving Nvidia-powered autonomous vehicles within the year! Right?

Audi even ran a Super Bowl commercial (featuring two Spock actors) which ended with a purportedly self-driving vehicle appearing to drive on its own, a full three years before Tesla’s video. For some reason, they don’t seem to get any flak for that one. In 2016, the industry was full of ambitious visions and promises that autonomous vehicles were right around the corner. In this context, Tesla was brash, but far from alone in suggesting that an autonomous vehicle revolution was imminent.

However, some argue that Tesla went too far in painting this as being farther along than it was, potentially misleading both customers and investors. Whether you agree with this, and whether you interpret it as intentionally (even criminally) deceptive, or just as a fairly typical “look at this amazing future thing we’re building” marketing stunt, or as an example of characteristic overconfidence and naivety - that’s ultimately a subjective matter. I’ve generally given Tesla the benefit of the doubt on this one, but I understand why not everyone does that.

While researching this piece, I did find that contemporary coverage of the video from 2016 seems to show that it was indeed presented and interpreted as a preview of something that would be “some way off in the future”.

That didn’t stop unscrupulous publications from writing deceptive articles and headlines like this incredibly misleading MotorTrend piece published in 2021:

No doubt the author will defend that piece because of the “former employee alleges” qualifier. However, if you read the article, it becomes clear that their source didn’t actually say what they’re implying with the headline and lede. Here’s the only explanation they give for this claim that the video was “faked”:

I hope that the absurdity of calling such a thing “faked” based on this explanation is obvious. In its early attempts to build “FSD”, Tesla did in fact pursue an approach, referred to by some in the Tesla hacking community as “Autopilot on rails”, that relied on detailed map data to operate. They later scrapped this approach and worked their way to the solution they’re deploying today – which still (rightly) uses maps!

Enhanced Autopilot and the FSD package

With 2016’s Autopilot 2.0 release came the brand name “Enhanced Autopilot”, which included a variety of features (including TACC, Autosteer, Summon, and AutoPark). This was the thing you could buy and receive immediately (or at least relatively soon), whereas the “FSD Capability” package was squarely a pre-order (and required that you also buy Enhanced AP). Tesla provided no timeline for when the “FSD” functionality would actually be delivered. Presumably, though, buyers would reasonably expect that it would come during the vehicle’s usable lifetime (and perhaps even more importantly, during their ownership of the car). Uptake of this option was likely quite small. One reason is that Tesla promised you could buy it later (though it might cost more), backed by its marketing claim that “every Tesla includes the hardware necessary to support FSD”.

I doubt many customers were willing to pay thousands of dollars for such a vague promise. However, presumably some did, and I believe Musk and Tesla further encouraged fans to buy the FSD option as a sort of “Kickstarter”-like program, through which they could help Tesla fund the team to complete the work faster.

The “FSD” offering remained available in this purely pre-order form for a few years, with only minor changes in wording and pricing occurring from time to time.

2018

In 2018, Tesla had reached a reasonably good place regarding the performance of their TACC and Autosteer features – intended primarily for use on interstates and other restricted access highways. Around this time, they began developing and testing a variety of capabilities needed to support other kinds of roads – such as stop sign and traffic light recognition. However, development of these capabilities seemed to stall out, and their attention moved elsewhere.

They soon began testing a new highway-focused feature with some customers in a special Early Access program. This functionality, eventually designated “Navigate On Autopilot” (or “NOA”), enabled Autosteer to follow a route chosen by the car’s navigation system along supported pre-mapped highways. When enabled, this mode can automatically take exits and interchanges, as well as suggest lane changes - either to pass slower traffic, or to be in the correct lane to take an upcoming exit. If confirmed by the driver, the car then executes the lane change automatically.

This feature eventually rolled out in late 2018 to all owners with the Enhanced Autopilot package (which included any “FSD” buyers). It also gained an option to let the driver turn off lane change confirmations and have lane changes executed automatically. This is only partly true, though: even today, before it begins the actual lane change, the car will alert the driver and insist on some input to the steering wheel to acknowledge that they are ready to take over if needed. This functionality has remained largely unchanged since late 2018 (though some implementation details have changed).

Owners tend to express mixed feelings about the functionality of Navigate on Autopilot. The off-ramp and interchange behavior can be hit or miss, and is particularly sensitive to changes in road layouts. In general, when the map data is correct, it works rather well in my experience. But if an exit is closed or relocated, it can get confused. Temporary closures and detours, even if “temporary” means multiple years, are generally not incorporated into the maps. Even for permanent changes, it sometimes takes years before they’re included in updated map data.

The other NOA functionality, where it suggests or initiates lane changes to pass slower vehicles or to follow the navigation route while on a supported highway, tends to be more trouble than it’s worth. It often suggests lane changes that aren’t necessary or are premature, and just generally takes too long to initiate them, especially if you’re in the so-called “no confirmations” mode (counterintuitive though this may be). I use it sometimes, but often deactivate it and just stick to regular Autosteer with the wonderful Automatic Lane Change feature (where I activate a turn signal and the car finds a gap and moves into it).

2019

The Retcon

“Hold my beer.” - Elon Musk, presumably

Musk said something many of us found very controversial in early 2019 when, during an earnings call, he stated that “Full Self-Driving Capability is there”, apparently referring to Navigate On Autopilot as the highway portion of the promised FSD solution. He then stated they’d be working on the off-highway portion next.

This was concerning, as the Navigate On Autopilot functionality, while novel and relatively impressive at the time, was not at all “full self-driving”. Just like with regular Autosteer, it required the driver to constantly pay attention, keep their hands on the wheel (and eyes on the road), and to be ready to take over without warning. That’s fine for “Level 2” functionality, but Musk had pitched FSD as “Level 5” – and even a charitable interpretation of the promised functionality would require a Level 3 solution at minimum. Further, this feature was given to all Enhanced Autopilot buyers, so there was still no value being given to FSD buyers for their money.

That same year, Tesla made what I think was the biggest mistake they’ve made in all of this, by eliminating the Enhanced Autopilot package. In doing so, they took some of its functionality (TACC and a basic version of Autosteer) and included it with all new vehicles – referring to it as “Basic Autopilot”. This part I was fine with.

However, the rest of the Enhanced AP functionality – Navigate On Autopilot, Summon, and AutoPark – was now only available via the Full Self-Driving package. They also updated the FSD marketing language to suggest that the only yet-to-be-released FSD features consisted of “Traffic Light & Stop Sign Control”, and “Autosteer on city streets”. I described this at the time as Tesla “retconning” the definition of FSD - such that it now included some preexisting Level 2 ADAS functionality.

This was troubling for two main reasons:

1) It presented L2 functionality under a name which could mislead people into thinking the system was more capable than it was

2) It raised very real concerns that FSD may never offer functionality beyond SAE Level 2, and thus fail to deliver what was promised

Ever since its introduction, Tesla was subjected to a lot of criticism over the Autopilot name. Personally, I always thought it was a perfectly good brand for an ADAS. It is both a correct analog of aircraft autopilots (which do not make airplanes autonomous, and originally were little more than cruise control) and a good marketing name which kind of conveys what it does while leaving room for them to define it around the product truth of an L2 system.

However, it was maddening to me that in the midst of this controversy, Tesla would then begin selling some of the very same L2 functionality under the name “Full Self-Driving”. There’s no ambiguity in that name. No room to define it as anything other than an autonomous solution. Calling an L2 mode “self driving” would be bad enough, but when you add “full” to the name, you’re leaving absolutely no doubt that you mean every expectation a person could reasonably have. I saw this as Tesla hearing the feedback and saying, “you think Autopilot is a confusing name? Hold my beer.”

I liken calling an L2 system “full self-driving” to calling a 480p television set “Full HD”. It’s not Full HD. It’s not even HD. The entire reason the “Full HD” terminology came about was because “High Definition” included 720p, 1080i, and 1080p resolutions. This is somewhat analogous to the SAE J3016 automated driving levels. Level 2 is like 480p - the highest “standard definition” level that isn’t at all autonomous. Level 3 is like 720p - it’s the entry level autonomous mode, also called “conditional autonomy”. It means that in certain favorable circumstances, the car can operate without direct supervision. However, a driver must still be present and ready to take over if/when the car requests them to - whenever it is going to leave those supported conditions. Level 4 is where you get to full autonomy, or what a reasonable person could call “full self-driving”. It’s the level at which robotaxis become a thing and where cars without steering wheels (or yokes) are possible. There is a Level 5, but it is poorly defined and for most intents and purposes can be ignored. Nobody is building or testing a Level 5 solution today.

Why did Tesla make this change? I suspect it was a combination of two things. First, they recognized that FSD buyers hadn’t gotten anything for their money, and delivering on the promise was taking much longer than they (or at least, Musk) ever imagined it would. Second, they had a pile of deferred revenue associated with FSD purchases sitting around. Now they could perhaps say that some of that deferred revenue was for these retconned-as-FSD features, and start tapping into that when they need to boost their earnings numbers in a rough quarter.

Now, we did not see them recognize a lot of this deferred revenue back then, and any conspiracy theories that tricks like this are how Tesla reports its huge profits are unfounded. However, the first quarter of 2019 was a tough one for Tesla: revenue fell amid “logistics hell”, with a ton of cars sitting on boats to Europe when the quarter ended. This hammered the stock price, and they may have wanted to find ways to “smooth” the line on the revenue chart as they went from quarter to quarter. This is all speculation and inference on my part, but it’s the best explanation I can think of for making the changes they made.

One other possible explanation is just that they thought they could get all the people who would have bought Enhanced AP to pay them more when buying a new car. Basically, a stealth-ish price increase for Enhanced AP features – which, to many of us, are very desirable!

Whatever the motivation, I believe this was a terribly misguided change. It made criticism of Tesla’s naming and marketing far more reasonable, and it undermined the trust of some owners who felt the company was papering over their earlier promises. In June 2022, Tesla would reverse course on this and reintroduce Enhanced Autopilot - retconning the retcon, as it were.

Autonomy Day (April 2019)

One of the key milestones in the FSD journey was a special event Tesla hosted in April of 2019, dubbed “Autonomy Day” (video here). Tesla invited a variety of investors and analysts to come hear about the company’s progress in solving autonomous driving. The event included numerous presentations, many of which had appreciable technical depth, as well as a lot of grand aspirational statements and “visioneering”, particularly by Musk. Some attendees were given drives in vehicles equipped with development builds of the “FSD” tech, though details about these demonstrations were relatively scarce.

During this event, Tesla unveiled their new “Full Self Driving Computer” – an impressive, Tesla-designed computer using custom silicon. The chip at the heart of this new computer was designed by Tesla’s hardware engineers under the leadership of Pete Bannon – whose impressive resume includes co-developing Apple’s A5 chip after joining Apple as part of its PA Semiconductor acquisition. The computer Tesla introduced has two of these “FSD chips” – which are custom ARM SoCs which each contain two specialized neural network accelerator units. Despite all the confusion around FSD, it was clear from this development that Tesla wasn’t messing around.

Tesla even began retrofitting this new computer into existing vehicles. This would upgrade existing Autopilot 2.0 (and 2.5) vehicles to a new “Hardware 3.0” spec (often referred to as HW3 for short). The upgrade could be performed at any Tesla service center, and was free for customers who had paid for “FSD”. They also transitioned over a few months to having all newly produced vehicles include this hardware, regardless of whether “FSD” was purchased.

At one point during the presentation, Musk seems to go off-script and reveals that Tesla had already started work on the next generation of their “FSD chip” (part of what we’d start to call “HW4”). He says, “we’re not talking about the next generation today, but we’re about halfway through it”, and “all the things that are obvious for a next-generation chip we are doing”.

An interesting moment occurred shortly after that when an audience member asked about the objective for the next-generation chip. Musk starts to answer saying “we don’t want to talk too much about the next-generation chip but it’s, um…” and pauses for several seconds. During this pause, Bannon can be heard saying in a hushed voice to Musk (as if to feed him a line, and thinking his mic is off), “safety…”. Musk ignores this and just says it will be “at least three times better” and leaves it at that.

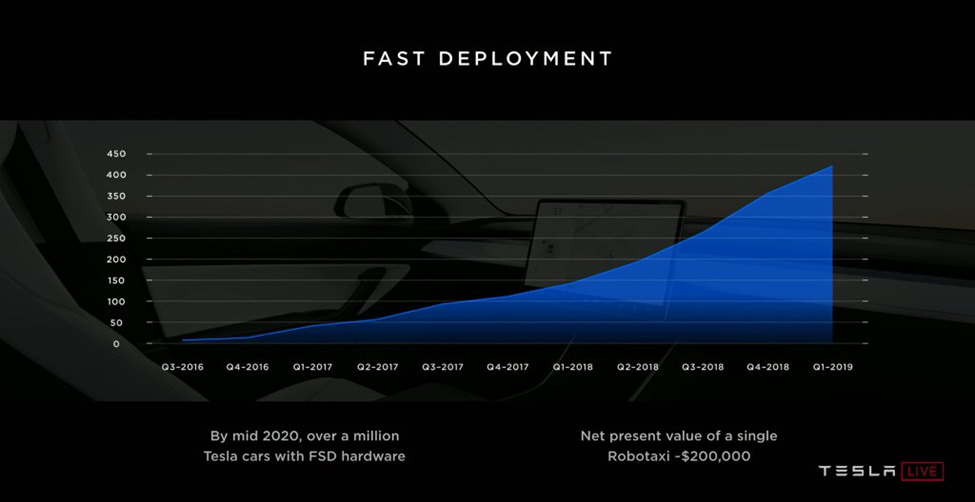

Throughout the event, Musk made a variety of bold and sometimes inconsistent claims. During a discussion of the FSD Computer, Musk made a statement that “by a year from now we'll have over a million cars with the Full Self-Driving computer”, and again later reinforcing his belief that this would mean a million cars ready to become a “robotaxi” with just a software update. Note that these statements were carefully crafted to not say that the software would be available in a year, just that a million vehicles would have the FSD Computer (and that this would be sufficient hardware for a “robotaxi”) in that timeframe. This is almost certainly a goal which they did achieve.

This was reiterated later in the presentation, and reflected in the text on this slide:

However, Musk couldn’t help but go a bit further than what I suspect was scripted, saying “we expect the first operational robotaxis next year - with no one in them”. Depending on how you interpret this, that could mean he thought they would be testing a small number of robotaxis without passengers, or it could mean actual robotaxis with passengers but no driver. Parsing these kinds of Musk statements is its own art form, and reasonable people may come away with different understandings of them.

Since he said “next year”, I interpreted this as meaning by the end of 2020 (rather than “a year from now”, which would have been spring 2020). To be fair, Musk prefaced this part of his presentation by saying that he is usually wrong about how long things will take, making a self-deprecating joke and stating that things often take longer than he wants, “but I get it done. And the team at Tesla gets it done”. You’d be forgiven for thinking he’d perhaps learn to just not make such statements or to at least pad his estimates a bit. You’d also probably not want to hold your breath.

Between the earlier statement and this one, many media outlets started reporting that Musk claimed there would be one million robotaxis on roads within a year (example). Rather than correcting the record, though, Musk further muddied the waters, going from the somewhat reasonable, nuanced claim about vehicles with the new hardware, and the heavily qualified, somewhat vague claim about “first operational robotaxis”, to actually claiming that robotaxis would be available in roughly that timeframe. In a normal company, there’d be a PR department tasked with addressing these issues. But Tesla isn’t a normal company, and in 2020 they went from having virtually no PR department to having literally no PR department.

One effect of Autonomy Day was to keep hope alive among fans, despite the retcon and other reasons to be concerned about “FSD” being vaporware. I took what I considered to be a balanced view: autonomy was still a ways away, but Tesla was serious about making it happen, even if it was going to take a while to get there, and we’d gain incremental benefits from their efforts along the way. The precedent set by retrofitting the “FSD Computer” into existing vehicles also gave hope that future hardware updates would be made available to FSD buyers.

2020

The following year, amidst the COVID pandemic, Tesla began rolling out the first non-retconned feature specific to the FSD package – called Traffic Light & Stop Sign Control. This enabled the car, while in TACC or Autosteer mode, to recognize traffic lights and stop signs and, well, stop at them. It was made available as a “beta” or “preview” feature, though technically Autosteer itself was and is still labeled as a “beta” feature (hence my calling it a “beta within a beta”). This feature didn’t just stop at red traffic lights, though. It was designed to stop at all of them. The driver had to confirm that they wanted to proceed through a green, either by tapping the accelerator or using the Autopilot stalk/button. I found this to be a reasonably clever way to start the functionality off with a safety-first posture. They could validate that the system was correctly identifying the color (and applicability) of a traffic light before allowing the car to proceed on its own.

In fact, this is largely still the case today when this feature is enabled by an FSD owner. They did eventually change it to proceed through green lights if it sees another car also go through. Generally, this means that if you are following someone, it will go through the intersection on its own. Otherwise, the car will stop itself even at green lights if the driver doesn’t actively confirm that it’s safe to proceed. Personally, I found this to work well in combination with Traffic Aware Cruise Control. However, I was concerned that its introduction would encourage more drivers to activate Autosteer in non-highway situations, where it really wasn’t designed to work.

The FSD Beta arrives

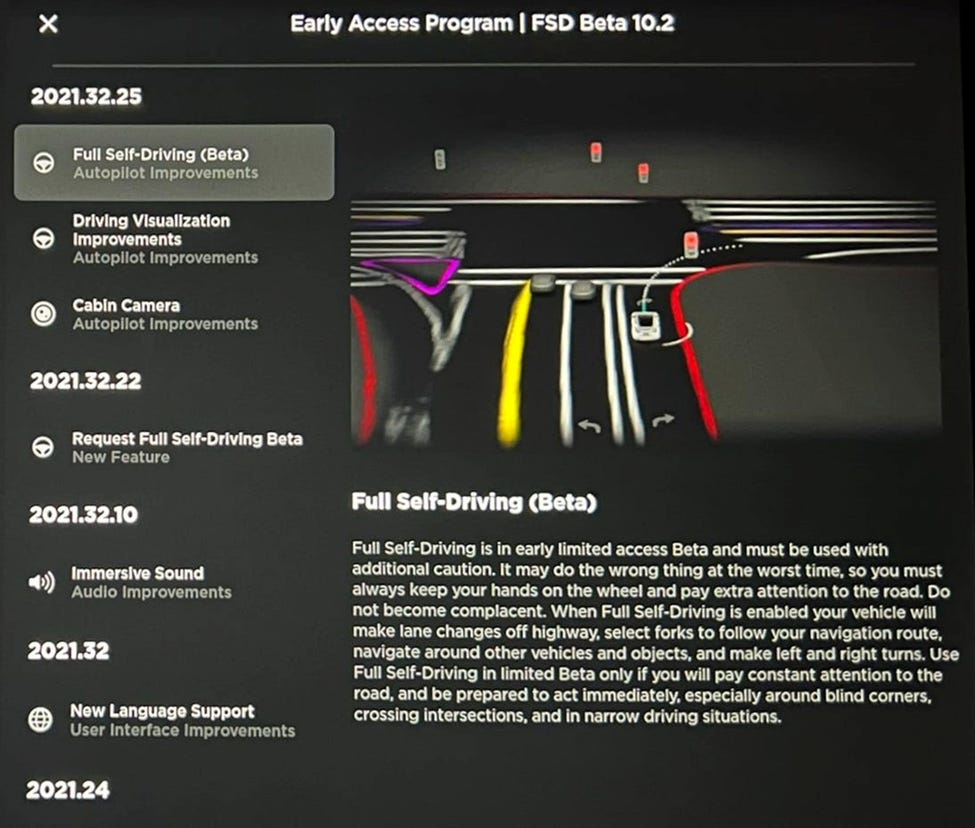

Later in 2020, they introduced the “FSD Beta” program. This was a big deal. The system was clearly a huge step forward from what they were doing before, and customers in this program could try out the “Autosteer on city streets” feature, which really does the vast majority of driving tasks on any non-highway route. It will stop at traffic lights (and go on green), it will take turns, it will change lanes with no confirmation, it will go around stopped cars, it will wait for pedestrians, and so on. The first YouTube videos of beta testers trying out the software were truly mind-blowing. Using it was similarly astounding, but also mildly terrifying. Tesla warned drivers in the beta program to “not become complacent” and said “it may do the wrong thing at the worst time”.

A message shown to FSD Beta drivers since 2020 (screenshot is from a 2021 build)

One of Tesla’s strengths has been in viral marketing and leveraging their fanbase to promote their brand and products. It’s thus not surprising that the initial FSD Beta rollout consisted of an audience of enthusiastic fans with Tesla-heavy social media presences. This worked incredibly well from a marketing standpoint, with dozens of these influencers producing free Tesla advertisements seen by countless viewers, all showing a truly remarkable and magical offering that couldn’t be found anywhere else.

An early look at the FSD Beta, from this video by YouTuber AI DRIVR

Of course, the beta also had a lot of rough edges. While these fan videos tended to be largely positive in terms of the impressions shared by the owners making them, every little mistake made by the system became a “gotcha” example for those inclined to criticize Tesla. Some of this criticism was reasonable, particularly when it came to questioning whether it was wise to rely on untrained owners to operate an in-development system safely. Others, however, took these as opportunities to misrepresent and sensationalize the situation. Some would suggest that Tesla was billing this as an autonomous system, painting every intervention or disengagement as a dangerous failure or malfunction (rather than as an expected occurrence for an L2 and “beta” system). Others would create montages of cases where the system struggled and needed help, making the system look far less capable and reliable than it was.

The result of this was that many fans would produce videos making the system look more capable and reliable than it was, while anti-fans would present the other extreme. The reality of using the FSD Beta is somewhere in between.

2021-2022

In 2021, Tesla made another controversial change and removed the radar from their US models. Why do this? The most logical explanation supported by the evidence is that they encountered supply shortages of the radar units they were using, and couldn’t get enough of them for all of their cars. Making fewer cars is never an option for Tesla, given the expectations that exist around their growth. And making cars without the Autopilot hardware would surely be anathema to Musk.

It just so happened that the new computer vision technology they’d been developing as part of the “FSD Beta” was getting good enough that it could generally do a sufficient job (and perhaps in some cases a better one) versus the radar, and so they had a viable alternative. The radar they were using was getting pretty dated, too, and they had constantly struggled with issues related to how they would map low resolution and sometimes intermittent radar returns onto the vision-based prediction of the car’s own path. Some customers believe that this switch helped reduce some “phantom braking” occurrences attributed to this problem. For a time, though, it seemed to make the problem worse.

Removal of the radar came with sacrifices. For a while, some functionality was missing or reduced on radarless cars. Even today, the maximum speed is lower and the minimum follow distance is longer than they previously were on radar-enabled vehicles. And in certain situations, like heavy rain or fog, the system now has to warn the driver and reduce its functionality more so than it did in the past.

Even ahead of this removal, a certain “Tesla hacker”, well-known within the Tesla enthusiast community, identified clues in the released software indicating that Tesla was testing out a new radar solution - apparently identified in the code by the name “Phoenix”. This led many to believe Tesla was experimenting with Arbe Robotics’ Phoenix imaging radar.

When discussing the removal of the HW3 radar, Musk made sure not to say that all radar was useless, instead using the phrase “coarse radar” to discuss what was being removed, and later saying that “only very high resolution radar is relevant”.

The next version will be huge. No wait, the next one.

Initially, Tesla’s “FSD Beta” builds had no versioning scheme separate from the regular software update version numbers they’ve used for ages. However, at one point during 2021, Musk announced that Tesla would start designating each FSD Beta release with its own additional version number, indicating how many beta releases there had been. They decided to start with “beta 8”, based on a rough count of this being the 8th build released to beta testers. Rather than incrementing the whole number for each release, though, they immediately started using minor version increments (e.g., 8.1, 8.2, 9.0, 9.1).

In October 2021, Musk revealed on Twitter that beta 10 would be arriving soon, and perhaps more importantly, beta 11 would contain a particularly major change – the unification of the highway mode (which functioned the same on beta builds as in regular production builds) with the new FSD Beta “city streets” mode. This became known as the “single stack” release.

However, 10.0 was followed not by 11, but by 10.1. And then 10.2. And then 10.3. All the way up to 10.12, and beyond! Rather than go back on his promise that 11 would be the version with a unified highway + city stack, Musk simply insisted that the team hold to the 10.x numbering until that unified stack was ready. It ended up taking longer than even the most conservative fans expected.

2022

Throughout 2022, Tesla continued to make some rather large changes and improvements to the software, while keeping the version number at 10.x. In fact, each 10.x release was a significant update, with smaller 10.x.y updates (e.g., 10.3.1) coming in between.

At Tesla’s 2022 shareholder meeting, Musk announced that 10.12 would be followed not by 10.13, but by 10.69 – a “joke” meant to convey that this would be an especially good release. Musk liked this joke so much that for the next six months or so, new beta builds with significant changes would be “10.69.x”, with minor changes showing up in builds labeled with increasingly ridiculous version numbers, like “10.69.2.1”. The “10” was frozen because of Musk’s single stack V11 promise, and the 69 was frozen because, well, Musk has the sense of humor of a 13-year-old, and presumably because going to 10.70 would be anticlimactic.

For the holidays, we got a special jump to 10.69.25, because why not.

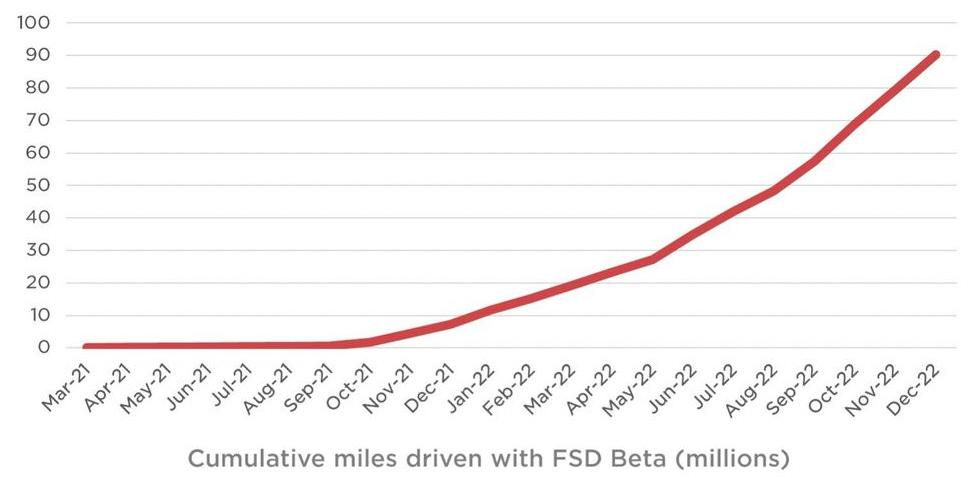

In Tesla’s Q4 investor deck, the company revealed that the FSD Beta had rolled out to 400,000 owners in the US and Canada, and that cumulative distance driven on the beta had passed 90 million miles.

Today (March 2023)

Throughout the 2+ years of FSD Beta releases, Tesla has made considerable improvements to basically every aspect of the software. They’ve employed impressive new AI/ML capabilities to map the 3D environment around the car, to understand lane layouts and markings, and even to plan the car’s actions. They’ve even adopted techniques similar to those used in large language models like OpenAI’s GPT-3: an autoregressive transformer model that treats lane topologies as a special kind of language, predicting, for example, what’s on the other side of an intersection in much the way GPT-3 predicts the next word. From a technical achievement perspective, it’s amazing what they’ve done – and that they’ve done it with 2016 sensors on a 2018 computer.

And yet, it’s still very much a level 2 system. And a “beta” one at that. Indeed, the same “may do the wrong thing at the worst time” warning is still present, and for good reason. It also still has scenarios it doesn’t even attempt to handle – such as reacting to emergency vehicles (e.g., pulling over to let them pass).

Valid and Invalid Criticism

There are reasonable questions to be asked and debates to be had about Tesla’s rollout of the “FSD Beta”. These include concerns about the naming, whether having untrained customers operate a “beta” of such a sophisticated system is wise or safe, whether it’s fair for Tesla to recognize deferred FSD revenue for L2 features and/or “beta” releases, whether customers are entitled to refunds, and so on.

There’s also a lot of sensationalism and outright bunk out there, none more pervasive than Dan O’Dowd’s incredibly dishonest TV ads and social media campaigns. I see a lot of otherwise smart people uncritically believing (and spreading) misleading claims like Dan’s, and I suspect part of this comes down to people simply not liking Tesla and/or Musk (sometimes with good reason, especially in the case of the latter). However, even if you have good reasons to dislike Musk, that’s no justification for believing or spreading convenient untruths.

NHTSA, the federal agency tasked with regulating such things in the US, has been testing the FSD Beta and providing feedback to Tesla. In particular, they recently identified four areas of concern where they believe the system’s behavior could make it difficult for a driver to avoid doing something illegal and potentially unsafe. Tesla initially said they disagreed that an attentive driver would have such difficulty, but they agreed to issue a voluntary “recall” (delivered as an over-the-air software update) nonetheless.

I think the issues highlighted by NHTSA are good ones, and I’m glad to see the agency getting Tesla to prioritize fixes for them. Some of these are issues that beta testers have been complaining about for two years now, such as the poor behavior the system exhibits when transitioning between speed limits. The beta takes a too-literal approach to speed limit increases: if you’re traveling at 25 mph and the limit changes to 55 mph, it will not begin accelerating until you pass the 55 mph sign. This may meet the letter of the law, but it isn’t how humans drive. Beginning to accelerate a bit sooner would make the behavior more natural and less likely to induce road rage.

More importantly, though, the current software takes the same approach to speed limit reductions. It doesn’t adjust the cruise/target speed down until the instant you pass the sign, and then takes its time decelerating to reach the new target speed. Indeed, it takes far too much time, in my experience. For sizable speed limit drops, I reliably have to take over and hit the brake pedal to get down to the new target speed in a reasonable timeframe (to avoid risking a speeding ticket) and then re-engage the system. This seems like a relatively straightforward thing to fix, and I’m surprised it took NHTSA getting involved to make it a priority.
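To put some rough numbers on why waiting until the sign is a problem, here is a small back-of-envelope sketch in Python using basic constant-deceleration kinematics (v₁² = v₀² − 2ad). It is not how Tesla’s planner works (I have no insight into that); the function names, the “start slowing” rule, and the 1.5 m/s² “comfortable” deceleration figure are all my own illustrative assumptions.

```python
"""Back-of-envelope check: how early would a car need to start slowing for a lower limit?

Illustrative assumptions only: constant deceleration, no reaction delay, and a
hypothetical "comfortable" deceleration rate. This is NOT Tesla's planner logic.
"""

MPH_TO_MPS = 0.44704  # 1 mph is exactly 0.44704 m/s


def slowdown_distance_m(v_from_mph: float, v_to_mph: float, decel_mps2: float) -> float:
    """Distance (m) needed to slow from v_from to v_to at a constant deceleration.

    From constant-acceleration kinematics: v_to^2 = v_from^2 - 2 * a * d.
    Returns 0.0 when no slowdown is needed.
    """
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    if v_to_mph >= v_from_mph:
        return 0.0
    v0 = v_from_mph * MPH_TO_MPS
    v1 = v_to_mph * MPH_TO_MPS
    return (v0 * v0 - v1 * v1) / (2 * decel_mps2)


def slowdown_time_s(v_from_mph: float, v_to_mph: float, decel_mps2: float) -> float:
    """Time (s) the same slowdown takes: delta_v / a."""
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    if v_to_mph >= v_from_mph:
        return 0.0
    return (v_from_mph - v_to_mph) * MPH_TO_MPS / decel_mps2


def should_start_slowing(distance_to_sign_m: float, v_from_mph: float,
                         v_to_mph: float, decel_mps2: float) -> bool:
    """Hypothetical anticipatory rule: start braking once the sign is no farther away
    than the distance needed to reach the new limit by the time the car passes it."""
    return distance_to_sign_m <= slowdown_distance_m(v_from_mph, v_to_mph, decel_mps2)


if __name__ == "__main__":
    # Example: a 55 mph zone dropping to 25 mph, at an assumed gentle 1.5 m/s^2.
    d = slowdown_distance_m(55, 25, 1.5)
    t = slowdown_time_s(55, 25, 1.5)
    print(f"Start slowing about {d:.0f} m before the sign; the slowdown takes about {t:.1f} s")
```

Under those assumptions, a car that only begins slowing at the sign spends roughly the next 160 m (about 9 seconds) above the new limit, even before accounting for a deceleration that is gentler than the one assumed here.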

Personally, I think it’s great that NHTSA is involved, testing the system, and recommending improvements, while also not being overbearing about it. Unserious critics such as O’Dowd like to complain that NHTSA hasn’t “banned” the software, suggesting that NHTSA is asleep at the wheel, so to speak. But in reality, NHTSA is working closely with Tesla, and there just isn’t a very good justification for banning any of this.

Beta 11 and the recall fixes

In the past few days, Tesla has begun rolling out the long-overdue “beta 11” update to their small inner ring of testers. We’ve had some indication for several months now that they were testing various 11.x builds with employees, but this is the first time a non-employee has received one of these builds. The version released is labeled 11.3.1.

This version delivers the long-promised convergence of the off-highway mode (until now, the only part that’s been different in the “FSD Beta”) and the on-highway mode, as well as an initial attempt at addressing the four NHTSA recall issues. Early accounts are quite promising, and I look forward to receiving an 11.x build, hopefully in the next few weeks. But it’s also apparent that there’s still a long road ahead to get to anything like the original “Full Self-Driving” promise.

Hardware 4 and what comes next

In the past couple of months, Tesla has begun rolling out a new hardware suite – dubbed “hardware 4” by the community. Tesla, however, has been completely silent on the topic. This new setup includes not just a new, even more powerful computer, but also heavily upgraded cameras and a new, apparently custom-designed radar (which uses the “Phoenix” name, but doesn’t seem to be an Arbe product, or at least is not marked as such). Model S and X vehicles with this equipment started reaching customers in the past couple of weeks.

Tesla, understandably, doesn’t want to “Osborne” their current supply of HW3.x vehicles, which may be the reason for their silence. However, many of us expected them to introduce this new hardware at their recent Investor Day event. Instead, we didn’t hear a peep about it. We’ve also learned that the vehicles arriving with this hardware still have the same number of cameras in the same locations as before, which surprised many of us, as we expected (and some rumors claimed) that camera locations would be changed and even some additional cameras added. I have a new theory about this, but regardless, the new hardware exists and is in customer hands now.

The only thing Musk has said about HW4 recently was a suggestion that they would likely not be making it available as a retrofit for existing vehicles. This was a huge disappointment to many, though it’s understandable that such an upgrade would be logistically very difficult. Still, for the cost that many of us have paid for “FSD”, we had hoped they’d have figured out a way.

With the advent of this new hardware, including the return of (a presumably much more capable) radar, plus substantially improved cameras and compute capacity, it now seems incredibly unlikely that current vehicles with FSD will ever offer an L3 or higher mode of operation. I wouldn’t even say it’s a sure thing that HW4 will get there, but it’s at least plausible. For HW3, I just can’t see Tesla daring to take responsibility for accidents caused by an actual autonomous mode, even a conditional L3 one. Certainly, it’s safe to say at this point that the full 1080p “self-driving” promise will not be delivered on HW3.

What does that mean for customers who’ve paid thousands of dollars for a promise that wasn’t fulfilled in a timely manner and which likely never will be fulfilled? Check back in, let’s say, 1-2 days probably (1-2 weeks definitely) for my thoughts on what Tesla should do to rectify the situation and chart an ambitious but realistic path forward.

Elon: “we expect the first operational robotaxis next year - with no one in them”.

Brandon: "that could mean he thought they would be testing a small number of robotaxis without passengers, or it could mean actual robotaxis with passengers but no driver. Parsing these kinds of Musk statements is its own art form"

There is no art form needed because the statement is crystal clear unless you're a mental contortionist trying to bend the statement into something that wasn't said. "No one in them" means no one. Nobody. No driver. No passengers. No cops. No sentient robots. Aka empty. Aka devoid of humans. Aka if you looked through the windows the seats would be empty. If you were to fill the car with poisonous gas, nobody would die. This is like an unintentional recreation of the famous Monty Python Dead Parrot Sketch: "is no more", "has ceased to be", "bereft of life, it rests in peace".

I understand you want to be perceived as an impartial, unbiased observer who isn't a fanboy or a hater, so that you can more credibly criticize FSD later as likely never being delivered. But when you hedge and hem and haw over crystal clear, unambiguous language in order to create a false controversy of "Well, both sides have valid interpretations", your impartiality looks silly.

Just state the obvious: Elon Musk was wrong. You can debate whether he's lying or just woefully ignorant but there is no debate that he has been nothing but consistently, spectacularly unreliable in his predictions.