Are Teslas the most or least safe vehicles?

What the data says, and why you might be confused

Tesla has recently been in the news again, in part regarding the safety of its vehicles and/or drivers. Depending on whom you listen to, you may have heard that Tesla makes the safest vehicles on the road, or you may have heard that they’re a menace and danger to everyone. So, which is true? Let’s find out.

To assess the safety of vehicles or vehicle makes, there are two main things we can look at:

Safety tests by reputable testing agencies (such as NHTSA, IIHS, Euro NCAP, Australian NCAP, AAA, etc.). These test both how well the vehicles protect their occupants and, in some cases, how well they protect other road users.

Real-world data about the number and severity of accidents involving the vehicles in actual use.

Let’s look at both. Then we can review some of the recent claims you may have seen in Rolling Stone or in articles referencing a LendingTree study which purportedly shows that Tesla, RAM, and Subaru drivers have more accidents than drivers of other vehicles.

Professional testing agency results

Who tests what

In the US, we have two primary testing agencies that perform vehicle safety tests: the National Highway Traffic Safety Administration (NHTSA) and the Insurance Institute for Highway Safety (IIHS). In Europe, even more extensive testing is performed by the European New Car Assessment Program (Euro NCAP). A similar program exists in Australia (ANCAP), and some other regulatory bodies around the world also perform these kinds of tests.

These tests primarily fall into three categories:

Occupant safety crash testing, in which vehicles are subjected to a variety of crashes, with “crash test dummies” and numerous sensors used to ascertain the level of protection the vehicle affords its occupants in various crash scenarios

Vulnerable Road User (VRU) crash testing, in which vehicles are crashed into “dummies” outside the vehicle, simulating pedestrians or cyclists, with various sensors attached

Active Safety tests, which aim to ascertain how effective a vehicle’s Advanced Driver Assistance System (ADAS) is at preventing or reducing the severity of accidents with other vehicles or VRUs (e.g., pedestrians and cyclists).

Crash testing results

Tesla has a long history of achieving exemplary results in both kinds of crash tests as well as ADAS tests. This pattern dates back to the original Model S, which was reported to have been so tough that it broke test equipment. It was, in 2013, the safest vehicle NHTSA had ever tested. Indeed, it didn’t just break machinery, but also broke the testing scale, achieving a 5.4 rating on a scale that was only supposed to go up to 5 (though NHTSA’s official statement was that they don’t differentiate at that level of detail).

In 2017, the Model X took the crown as the highest safety rated SUV the NHTSA had tested, and by a wide margin. It was the only SUV at the time to have achieved a perfect score in every test. In 2019, it would go on to be named a “Standout Performer” by the Euro NCAP, whose official statement said:

The standout performer of this round is undoubtedly Tesla’s Model X, scoring 94% for Safety Assist, the same as the Model 3 scored early this year. This makes the two Teslas the best performers in this part of the assessment against Euro NCAP’s most recent protocols. The recently updated Model X also achieved an impressive 98% for adult occupant protection, making it strong contender for Best in Class this year.

In addition to the highest Safety Assist and Adult Occupant Protection, the Child Occupant Protection and Vulnerable Road User Protection tests yielded very high scores.

The story is similar with the Model 3 and Model Y, as well as with the second generation Model S and X vehicles launched in 2021, which again raised the bar in all categories.

If we look at the latest available safety ratings from the agencies, we find the following results:

Sources: NHTSA | IIHS | Euro NCAP

As you can see, each of Tesla’s currently available vehicles has achieved superb safety ratings from these agencies in all categories.

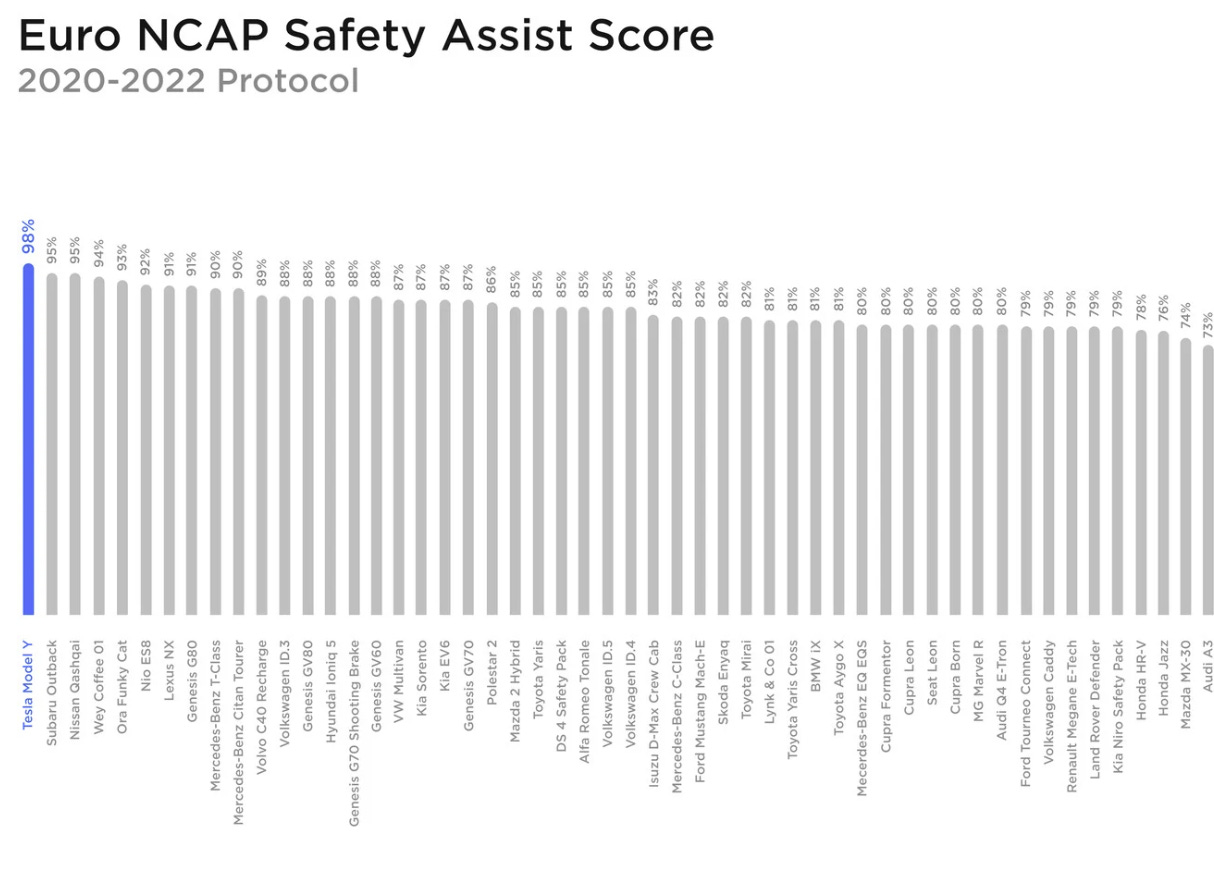

Looking more closely at the Euro NCAP results, some especially impressive details stand out. For example, the Model Y achieved the highest overall score of any vehicle tested up to that point.

That held until the 2022 Model S was tested, which then took the crown.

Both vehicles achieved the highest ever Safety Assist score, as well:

AAA ADAS tests

In addition to the above, in May 2022, the American Automobile Association (AAA) published a study called Evaluation of Active Driving Assistance Systems, in which they tested ADAS-equipped vehicles from three manufacturers: Tesla, Subaru, and Hyundai.

In this study, they performed tests similar to the Active Safety tests conducted by Euro NCAP, but with a twist: they tested with the respective systems’ Level 2 partial automation features enabled (AKA “Autopilot”, or more specifically, “Autosteer” in the Tesla). They also tested unique scenarios, including:

Approaching a 20MPH vehicle from behind while traveling 55MPH

Traveling 25MPH and having an oncoming vehicle traveling 15MPH cross into the test vehicle’s lane

Approaching a cyclist from behind while traveling 45MPH

Traveling 25MPH and having a cyclist suddenly accelerate into the vehicle’s path from the side

Here’s a summary of the test results:

Note that at the time, Tesla’s Autosteer system had no affordance for leaving its lane to avoid a crash. So, the fact that it slowed nearly to a stop before an oncoming vehicle crashed into it in its lane is really the best that could be expected. The other vehicles didn’t react at all and crashed at full speed.

Based on these results, cyclists should feel at ease around Tesla vehicles - but maybe not Subarus.

Since then, Tesla has been rolling out greater accident-avoidance capabilities. Their unfortunately named “FSD Beta” is capable of leaving its lane to avoid obstacles. Tesla has said they are working on bringing an advanced obstacle-avoidance capability based on the same technology to the regular Autosteer mode from the basic Autopilot package, though I don’t believe this has rolled out yet.

If you’re wondering why you haven’t heard about this test, perhaps you did. The media coverage was… not exactly informative, to say the least. Here are some example stories which covered this study:

AAA Crash Test Shows Driving Assistance Systems Still Underperform (motor1.com)

Cars With Active Driving Assistance Tech Crash During AAA Test (gizmodo.com)

Autonomous cars hit third of cyclists, all oncoming cars (The Register)

If you want to know why I lament the decline of journalism in the age of social media engagement metrics, these articles and headlines could be exhibit A.

Real-world data

Test results, of course, can only tell you so much. They may not, for example, tell you whether the vehicle’s design or marketing makes drivers more susceptible to distraction, or whether the vehicle’s safety features work as well in practice as they do in standardized tests.

Getting real-world data about accident rates for vehicles or vehicle makes is rather challenging. Many smaller accidents aren’t recorded or reported anywhere. Many non-fatal accidents aren’t reliably recorded anywhere either, except perhaps in insurance company databases (and only when a claim is filed, which doesn’t always happen).

There are two sources we can look at, though: data from Tesla themselves, and data tracked and made available by the NHTSA.

Tesla’s data

Tesla is in a unique position here, as they’re the only large-scale auto manufacturer with a cellular internet connection and telematics system in every vehicle they sell. This has enabled them to publish Vehicle Safety Reports, which provide a variety of data points we can look at.

The reports are broken down by quarter, and the most recent data is from the end of 2022. These days, Tesla publishes two main data points for each quarter:

Miles traveled between accidents “using Autopilot technology”

Miles traveled between accidents not “using Autopilot technology”

The former is understood to include usage of Tesla’s three L2 partial automation modes: Autosteer, Navigate On Autopilot, and the “Full Self-Driving Beta”. It remains unclear to me which bucket their L1 adaptive cruise mode, called Traffic Aware Cruise Control, is counted in.

Tesla says they count all accidents where an airbag deployed or an active safety restraint was activated. They also say they count any cases where Autopilot was engaged within 5 seconds before the crash as using Autopilot. This ensures that accidents following a last-second disengagement, by the driver or due to the system entering its fail-safe mode, are counted correctly.
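The counting rule Tesla describes can be sketched as a small classifier. This is only an illustration. The `CrashEvent` record, its field names, and the `classify` helper are hypothetical, because Tesla has not published its internal data format. The 5-second window is taken directly from Tesla’s description.

```python
from dataclasses import dataclass
from typing import Optional

# Window from Tesla's description: Autopilot engaged within 5 s before impact.
AUTOPILOT_WINDOW_S = 5.0


@dataclass
class CrashEvent:
    """Hypothetical crash record; field names are illustrative only."""
    airbag_deployed: bool
    active_restraint_triggered: bool
    # Seconds between Autopilot's last engagement and the impact:
    # 0.0 if engaged at impact, None if never engaged on the trip.
    seconds_since_autopilot: Optional[float]


def classify(event: CrashEvent) -> Optional[str]:
    """Return the reporting bucket for a crash, or None if it isn't counted."""
    # Only crashes severe enough to fire an airbag or active restraint count.
    if not (event.airbag_deployed or event.active_restraint_triggered):
        return None
    # A last-second disengagement still counts as "using Autopilot".
    if (event.seconds_since_autopilot is not None
            and event.seconds_since_autopilot <= AUTOPILOT_WINDOW_S):
        return "autopilot"
    return "no_autopilot"


# Driver (or the system) disengaged 1.2 s before impact: still an Autopilot crash.
print(classify(CrashEvent(True, False, 1.2)))  # autopilot
```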

Side note: Some have claimed that Tesla “deactivates” Autopilot in the instant before a crash to somehow avoid accountability or to manipulate these numbers. There is no reason to believe this is the case. As mentioned, Tesla counts such cases as using Autopilot. Further, with an L2 driver assistance system, the driver is always responsible for safe operation of the vehicle and must be ready to take over or override the system without warning at any time. This is true for all L2 systems, including those marketed as “hands free” by certain manufacturers. When someone says that such a system “deactivated” just before a crash, it means the system blared loud alarms with a “take over immediately” message and tried to slow or stop the vehicle. This is an important safety measure. It is designed to mitigate the severity of the crash and to ensure that the vehicle does not keep driving after an impact, when the driver may be incapacitated and/or the system damaged.

Back to the data. Tesla compares their numbers with the NHTSA-reported estimate for the average rate of similar severity accidents in the US.

For the non-Autopilot case, this seems like a fair enough comparison. Of course, it doesn’t mean that the difference is due entirely to Tesla’s safety features. As I previously detailed, vehicle age, driver age, and the locations where the vehicles are used are all confounding factors here. Still, this is about as good as real-world data gets.

For the Autopilot case, things are more complicated. In addition to the confounds mentioned above, the main Autopilot L2 modes are primarily used on restricted access highways - that is, highways such as interstates, with a large median separating oncoming traffic, no cross traffic, no stop signs or traffic lights, and generally no pedestrians or cyclists. Thus, we should expect that there are far fewer accidents per mile for this subset of driving, because that’s what the data shows is the case for driving on those types of roads in general.
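To see why the road mix matters on its own, here is a toy calculation. It assumes, purely for illustration, that every driver has the same per-mile crash rate on highways and the same rate on other roads. The rates and mileage splits are made-up numbers, not NHTSA or Tesla figures.

```python
# Toy numbers for illustration only: crashes per million miles by road type,
# assumed identical for every driver and every vehicle.
RATE_PER_MILLION_MILES = {"highway": 0.5, "other": 2.0}


def overall_rate(miles_by_road: dict[str, float]) -> float:
    """Crashes per million miles for a given mix of road types."""
    total_million_miles = sum(miles_by_road.values()) / 1e6
    expected_crashes = sum(
        RATE_PER_MILLION_MILES[road] * miles / 1e6
        for road, miles in miles_by_road.items()
    )
    return expected_crashes / total_million_miles


# Assumed mileage splits: assisted driving is mostly highway miles.
assisted_mix = {"highway": 9e6, "other": 1e6}
manual_mix = {"highway": 4e6, "other": 6e6}

print(overall_rate(assisted_mix))  # about 0.65
print(overall_rate(manual_mix))    # about 1.4
```

The per-road-type risk is identical in both cases. Even so, the highway-heavy mix comes out at less than half the crash rate per million miles. That is exactly the kind of confound a road-type adjustment tries to remove.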

That said, the rollout of the so-called “Full Self-Driving Beta” has changed this situation somewhat, as it is intended for use on all manner of roadway. It is unclear whether Tesla included FSD Beta usage in the above numbers. During the initial rollout, starting in 2021 and expanding through 2022, Tesla required drivers to pass a virtual driving test of sorts by achieving and maintaining a high “safety score” over a minimum number of miles of driving. This was a reasonably prudent way to roll out the technology in its early iterations. It likely also had the convenient side effect of limiting any increase in “using Autopilot” accidents as the rollout progressed.

As of late 2022, Tesla made the FSD Beta available to almost anyone who has a supported vehicle and has paid for the corresponding package. It will be interesting to see what effect this may have had on these data points if and when they release 2023 numbers.

NHTSA’s data

While I don’t think Tesla has intentionally falsified their data, I understand that some will simply not trust anything they say. Given the company CEO’s propensity for twisting the truth, I can’t entirely blame them. Further, even if we all did trust Tesla to not be deceptive, they could have mistakes in their data. Either way, I think attempts to validate their data are worthwhile.

In early 2021, I used the NHTSA’s Fatality Analysis Reporting System to compare Tesla’s rate of fatal accidents with those of other vehicles. Here’s a quick summary of my findings back then:

Newer vehicles were involved in fewer fatal accidents than older vehicles

More expensive vehicles were involved in fewer fatal accidents than less expensive ones

Tesla vehicles had similar fatal accident rates to equivalent Audi models (e.g., Model S versus A7/S7, Model 3 versus A4/S4) for the same model year (2018)

Tesla vehicles had less than half the fatal accident rate versus the 2018 Ford Focus

It’s been a while since I did that investigation, so how do things look today?

Methodology

For this analysis, I am largely repeating what I did in early 2021. The steps are:

1. Identify proxy vehicles, such as the Audi A4/S4

2. Choose the most recent year for which fatal crash data is available (currently 2021)

3. Choose a model year prior to that year to use for comparison, so that approximately all such vehicles were in use throughout the year we’re studying

4. Query FARS for the number of fatal accidents involving the chosen model(s) during the chosen analysis year

5. Look up the reported US sales figures for the chosen model(s) for the chosen model year

6. Divide the value found in step 4 by the value found in step 5 to determine a rate (reported below per 1,000 vehicles sold)
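Steps 4 through 6 boil down to one division. Here is a minimal sketch. The `fatal_rate_per_1k` name is a hypothetical helper, and the inputs in the example are placeholders, not real FARS counts or sales figures.

```python
def fatal_rate_per_1k(fatal_crashes: int, units_sold: int) -> float:
    """Step 6: fatal crashes involving a model (step 4, from FARS)
    per 1,000 units of that model sold (step 5)."""
    if units_sold <= 0:
        raise ValueError("units_sold must be positive")
    return fatal_crashes / units_sold * 1000


# Placeholder inputs, not real FARS counts or sales figures.
print(fatal_rate_per_1k(12, 250_000))  # about 0.048
```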

Now, this methodology has limitations. One complication involves step 5, because manufacturers generally don’t report sales by model year but rather by fiscal or calendar year. To adjust for this as best I can, I look at calendar year sales for Tesla, since they don’t use separate “model years” like other manufacturers and generally just use the manufacturing date as the model year for a given vehicle. For the others, I calculate the rate twice. First, I use the sales reported for the associated calendar year. Second, I count the fourth quarter of the prior year plus the three quarters that follow. Neither will yield a perfect count, but between the two we should have an approximation sufficient for our needs.
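The two sales approximations for non-Tesla models can be sketched as follows. This assumes quarterly US sales are available as a mapping from (year, quarter) to units sold. The function name and the example figures are hypothetical.

```python
def model_year_sales_estimates(
    quarterly_sales: dict[tuple[int, int], int], model_year: int
) -> tuple[int, int]:
    """Two rough proxies for a model year's US sales.

    quarterly_sales maps (calendar_year, quarter) -> units sold; missing
    quarters count as zero.
    Returns (calendar-year total, prior Q4 plus Q1-Q3 total).
    """
    calendar_total = sum(quarterly_sales.get((model_year, q), 0) for q in (1, 2, 3, 4))
    shifted_total = quarterly_sales.get((model_year - 1, 4), 0) + sum(
        quarterly_sales.get((model_year, q), 0) for q in (1, 2, 3)
    )
    return calendar_total, shifted_total


# Hypothetical quarterly figures for illustration.
sales = {(2019, 4): 100, (2020, 1): 80, (2020, 2): 90, (2020, 3): 110, (2020, 4): 120}
print(model_year_sales_estimates(sales, 2020))  # (400, 380)
```

Running both estimates through the step 6 division gives a small range for the rate rather than a single point.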

At this time, the newest data NHTSA makes available via FARS is from 2021, so I will focus on 2020 model year vehicles. I’m again looking at the Audi A4 series, but I have swapped the Ford Focus for the Ford Fusion, as Ford discontinued the Focus in the US prior to the 2020 model year. After computing these values, I discovered that Ford ended production of the Fusion in July 2020. However, I believe that is around when they normally switch over to producing the next model year. As you’ll see, the Fusion’s accident rate is high enough for our purposes even if 2020 was a “short” model year for it.

The results seem consistent with what I saw two and a half years ago. Tesla’s fatal accident rate is nearly identical to that of the Audi A4 series, and far lower than that of a standard Ford mid-size sedan. In this case, the Ford’s accident rate is more than 4 times that of the Tesla Model 3.

I looked briefly at the Model Y data, but it appears there was only one fatal accident involving a 2020 Model Y in 2021. That gives a rate of around 0.024 per 1K sales, or half that of the Model 3.

I also decided to look at combined 2020 and 2021 model year data for 2021 accidents, and to add some other vehicles that compete more directly with the Model Y. This could be slightly skewed by uneven sales rates throughout 2021. The only case where I think that may have meaningfully affected the comparison is the Mach-E (in its favor), as I call out below.

Here we see minimal change to the Model 3 fatal accident rate. The Model Y’s rate is a bit higher, but the margin is fairly small. In this case, we find the Ford Escape in basically the same range as the Model Y. The Subaru Forester has a slightly higher rate. I included the Ford Mustang Mach-E as well, though it only began sales in 2021 (technically, 3 were delivered in December 2020, apparently, and only 238 in January 2021).
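The Mach-E caveat comes down to exposure. A vehicle delivered in December has had only a few weeks in which it could be involved in a 2021 crash, yet it counts fully toward sales. Here is a rough sketch of vehicle-years of exposure. It assumes mid-month deliveries and no vehicles leaving service during the year. The helper name and figures are illustrative.

```python
def vehicle_years_in_service(monthly_deliveries: list[int]) -> float:
    """Approximate vehicle-years of exposure accrued within one calendar year.

    Assumes each month's deliveries happen mid-month (index 0 = January)
    and that every delivered vehicle stays in service through December 31.
    """
    if len(monthly_deliveries) != 12:
        raise ValueError("expected 12 monthly delivery counts")
    # A vehicle delivered mid-month m has (12 - m - 0.5) months left in the year.
    return sum(n * (12 - m - 0.5) / 12 for m, n in enumerate(monthly_deliveries))


# Same 12 sales, very different exposure.
print(vehicle_years_in_service([12] + [0] * 11))  # 11.5
print(vehicle_years_in_service([1] * 12))         # about 6.0
```

Under these assumptions, the same twelve sales represent nearly twice the exposure when delivered early. A per-sale rate therefore flatters a model whose deliveries ramped up during the year.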

I didn’t redo the full comparison of the Model S, but for both the 2020 and 2021 model years, there was only one fatal accident in 2021. For the Model X, there were two.

One last point of clarification: These accident numbers are for accidents involving these vehicles. They are not accidents caused by these vehicles, nor are they accidents where an occupant in the subject vehicle was killed. Being counted here merely means that there was at least one fatality in the accident.

My takeaway from this data is that Tesla’s vehicles are on par with or sometimes better than vehicles in their class when it comes to the number of fatal accidents they’re involved in. Note that all of these accident numbers are, thankfully, quite small. That’s good, but it also means that many of the variances here are probably not statistically significant. Perhaps if I find more time later on I’ll see if I can compute standard deviations and p-values from the available data to assess that more rigorously, but given that there are so many confounds involved (e.g., drivers don’t choose their vehicles randomly, some vehicles are driven more or fewer miles than others, etc), and limits on how granular the data gets, it’s probably not worth the effort.
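For anyone who does want to check, one standard way to compare two small counts with different exposures is an exact conditional test. Given the combined number of crashes, the share falling on the first model is binomial under the null hypothesis of equal rates. This sketch uses hypothetical counts and sales, and it does nothing about the confounds listed above.

```python
from math import comb


def rate_ratio_p_value(k1: int, n1: float, k2: int, n2: float) -> float:
    """Two-sided exact p-value for H0: two Poisson counts share one rate per unit.

    k1, k2 are event counts (e.g. fatal crashes); n1, n2 are exposures
    (e.g. units sold). Conditional on k1 + k2, k1 is binomial with success
    probability n1 / (n1 + n2) under H0.
    """
    total = k1 + k2
    p = n1 / (n1 + n2)
    probs = [comb(total, i) * p**i * (1 - p) ** (total - i) for i in range(total + 1)]
    observed = probs[k1]
    # Add up every outcome no more likely than the observed one; the small
    # relative tolerance keeps floating-point ties from being dropped.
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-9)))


# Hypothetical: 1 vs 2 fatal crashes on equal sales (a twofold rate difference).
print(rate_ratio_p_value(1, 40_000, 2, 40_000))  # 1.0
```

With counts this small, even a twofold difference in rates is entirely consistent with chance.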

I think it’s safe to say that based on these data sources, there are no glaring issues with the rates of fatal accidents involving Tesla vehicles as of this 2021 data.

Is it possible that Tesla vehicles are more prone to involvement in non-fatal accidents? Perhaps. However, that does not show up in Tesla’s data, at least for accidents severe enough to cause airbag deployments or active restraints (e.g., seatbelt tensioners) to engage. Since there’s no evidence to support such a hypothesis, and some evidence to the contrary (though some may not trust the source), I would assume that’s not true to any meaningful degree until and unless some data arises supporting that idea.

What about those recent articles?

We’ve determined that all of Tesla’s vehicles excel at all manner of professional safety assessments, and that based on the available accident data, they seem to be at worst on par with new premium vehicles of a similar class, and at best moderately less prone to fatal accidents. Or, put another way, they are among the safest vehicles you can buy.

With that in mind, let’s take a look at three claims which have been making the rounds over the past few days. They are:

Tesla issued a safety recall for 2 million cars to address NHTSA concerns about Autopilot misuse

A researcher analyzed Tesla’s safety report data and found that after correcting for road type and age, Autopilot increases accident rates by 11%

A new study shows that Tesla drivers have the highest accident rate of all vehicle brands

Let’s look at each of these one-by-one:

Claim 1: Tesla issued a safety recall of 2 million cars to address NHTSA concerns about Autopilot misuse

Verdict: True

Tesla issued a voluntary recall to address concerns that the NHTSA raised about misuse of Autopilot. The changes include:

Suspending drivers’ access to Autopilot if they repeatedly fail to pay attention while using it. This borrows an existing mechanism from the “FSD Beta” program and applies it to all Autopilot usage

Increased restrictions on where and when Autosteer, the basic adaptive cruise control plus lane centering mode, can be activated

Details of this part of the change are unclear, and in my initial testing the system still allows you to engage Autosteer on a variety of non-highway roads

Increased strictness of Driver Monitoring checks (aka “nags”) when using Autosteer outside of restricted access highways

More aggressive driver monitoring and “nags” on non-highway roads

Much more aggressive checks and warnings when using it near intersections or other situations where it’s not recommended for use

Changes to the user interface for driver attention checks (“nags”) and related warning messages to make them even more prominent

Some have suggested that Tesla should go further and restrict use of Autosteer to restricted access highways. This is a tough call for a few reasons. I can see the appeal of doing so, but I think it’s important to point out that the majority of similar basic hands-on L2 systems from other manufacturers impose no such restrictions. Instead, they leave it up to the judgement of the driver to decide where it is appropriate to use cruise control and lane centering.

Such a restriction likely would have been more valuable in the past, when Autosteer was a much simpler and more limited solution (e.g., with no attempt at all to handle cross traffic). It could still be valuable these days, but the current Autopilot software actually incorporates a fair amount of the “FSD Beta” tech, which is how it knows to bug you more around intersections. This also means it actually does respond to cross traffic most of the time (but this is not a documented or guaranteed behavior, and you shouldn’t rely on it!).

Tesla’s “Navigate On Autopilot” mode is already restricted to use on supported restricted access highways. The “FSD Beta” has no such restrictions as it’s intended to work on any type of road (but still with a vigilant driver ready to intervene whenever needed).

The “recall” is an over-the-air software update which has already begun rolling out to affected vehicles. It does not require any special action from the owner, nor does it take away any functionality.

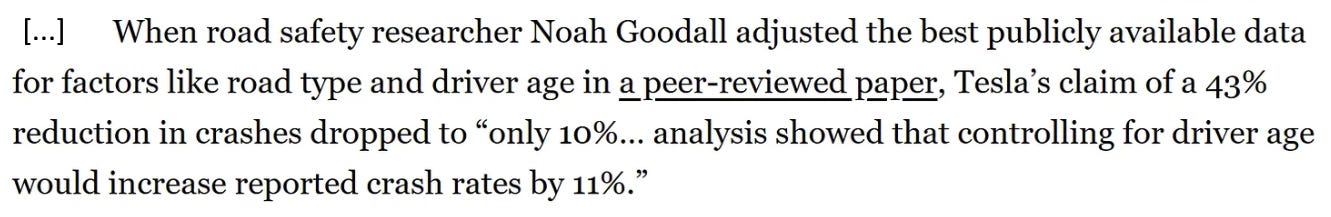

Claim 2: After correcting for road type and age, Autopilot increases accident rates by 11%

Verdict: Entirely false

This claim comes from long-time Tesla critic Ed Niedermeyer, in a piece for Rolling Stone entitled “Elon Musk's Big Lie About Tesla Is Finally Exposed (rollingstone.com)”. I’m not going to go through the entire piece, which has many issues, but will focus on the one that’s the most problematic.

The original piece had this paragraph:

Sounds pretty bad for Tesla, right? The only problem is… the analysis says nothing of the sort. Here’s the chart from the paper:

For every quarter except for two in 2019, the road + age adjusted Autopilot values are still lower (i.e., better) than the equivalently adjusted non-Autopilot values.

I pointed this out on Threads to Rolling Stone’s editor, Noah Shachtman, and through other channels, but he declined to correct the problem. Noah Goodall, the author of the study, then spoke up to object to how his work was being misrepresented:

You can read the entire thread here.

Sometime later, the article received a footnote to say that it was updated to “clarify” Goodall’s results. However, the actual change to the article doesn’t clarify anything, and only slightly changes how the misleading number is presented:

It now uses quotation marks and a deceptively edited and cherry-picked quote to continue the same misrepresentation. In the actual paper, which you can read here, the text is saying that controlling for age increases the adjusted value by 11% versus only adjusting for road type. It is not saying that it yields an 11% increase over the baseline non-AP number.
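The difference between the two readings is easy to show with numbers. The rates below are hypothetical, chosen only to make the arithmetic visible. They are not values from Goodall’s paper.

```python
# Hypothetical crash rates (crashes per million miles), for illustration only.
non_ap_road_and_age_adjusted = 1.00  # non-Autopilot, adjusted for road type and age
ap_road_adjusted = 0.70              # Autopilot, adjusted for road type only

# Reading in the paper: adding the age adjustment raises the Autopilot figure
# by 11% relative to the road-type-only figure.
ap_road_and_age_adjusted = ap_road_adjusted * 1.11

# Compare like with like: both figures adjusted for road type and age.
relative_change = ap_road_and_age_adjusted / non_ap_road_and_age_adjusted - 1

print(round(ap_road_and_age_adjusted, 3))  # 0.777
print(round(relative_change, 3))           # -0.223
```

In this example, an 11% bump on top of the road-adjusted Autopilot figure still leaves it well below the equivalently adjusted non-Autopilot figure. The Rolling Stone phrasing instead implies an 11% increase over the non-Autopilot baseline.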

As the study’s author says, his actual result showed that Tesla’s Autopilot/Autosteer solution results in reduced accidents even after controlling for road type and age. However, as both Noah and I have pointed out in our respective analyses, there are many limitations to this sort of adjustment. It’s instructive in showing that a naive interpretation of Tesla’s numbers doesn’t tell the whole story, but it’s not an exact correction of their numbers, either.

My takeaway: Don’t trust Niedermeyer or, unfortunately, Rolling Stone.

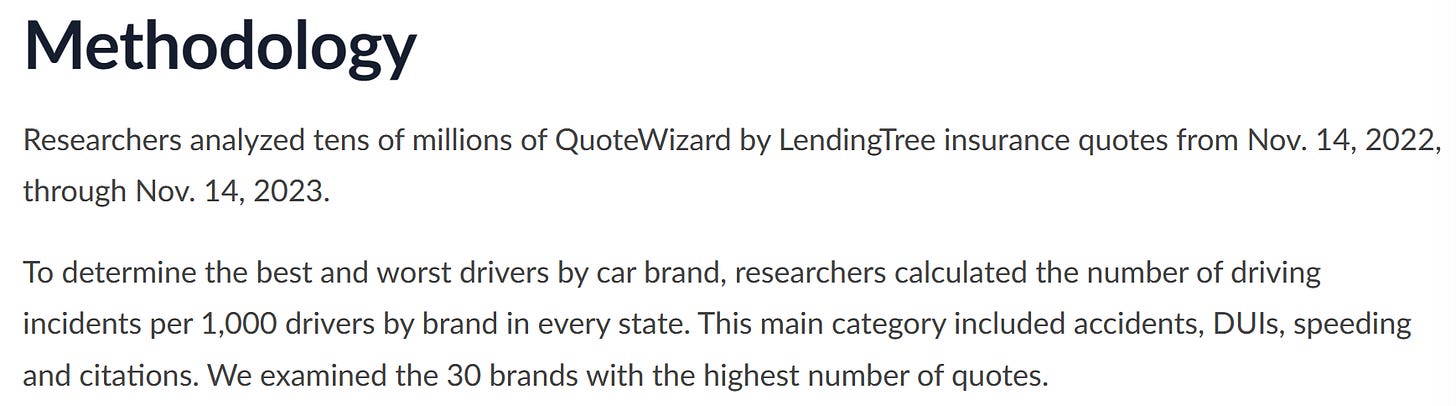

Claim #3: Tesla drivers have more accidents than any others

Verdict: Entirely false (previously “Almost certainly false”, see update inline below)

This claim seems to originate with a Forbes article:

Tesla Has The Highest Accident Rate Of Any Auto Brand (forbes.com)

It says:

Tesla drivers are the most accident-prone, according to a LendingTree analysis of 30 car brands. It found that Tesla drivers are involved in more accidents than drivers of any other brand. Tesla drivers had 23.54 accidents per 1,000 drivers. Ram (22.76) and Subaru (20.90) were the only other brands with more than 20 accidents per 1,000 drivers for every brand.

This has been referenced by others, including Electrek and Jalopnik.

Based on what we saw earlier, this may seem rather surprising. That’s because it’s not true.

To understand why, we have to look at the data source referenced by the Forbes article, which is this blog post on LendingTree’s website:

Ram, Tesla and Subaru Have the Worst Drivers | LendingTree

Based on the headline, and even much of the content, you might think it supports the Forbes narrative. So, where’s the issue? That’s found in the methodology section of the piece.

Some of the articles covering this, like the Electrek piece, have stated that this data is based on an analysis of insurance claims. If it were, that would be insightful data indeed. However, this data is based on a review of insurance quotes.

Now, what does it mean to assess accident rates based on insurance quotes? They don’t provide any details. All they say is that it’s based on usage of their “QuoteWizard” online insurance shopping tool.

My initial interpretation is that this means they are looking at:

Requests for insurance quotes for the named vehicle makes

The driver’s specified accident history when requesting the quote

If this is true, it means that the accident rates being reported here are, at best, those of recent or prospective Tesla buyers, since most people only shop for car insurance when they’re also shopping for or in the process of buying a new car. Sure, some people buy the same brand of vehicle multiple times, but this certainly isn’t always the case, and is most often not the case for a new and rapidly growing brand like Tesla.

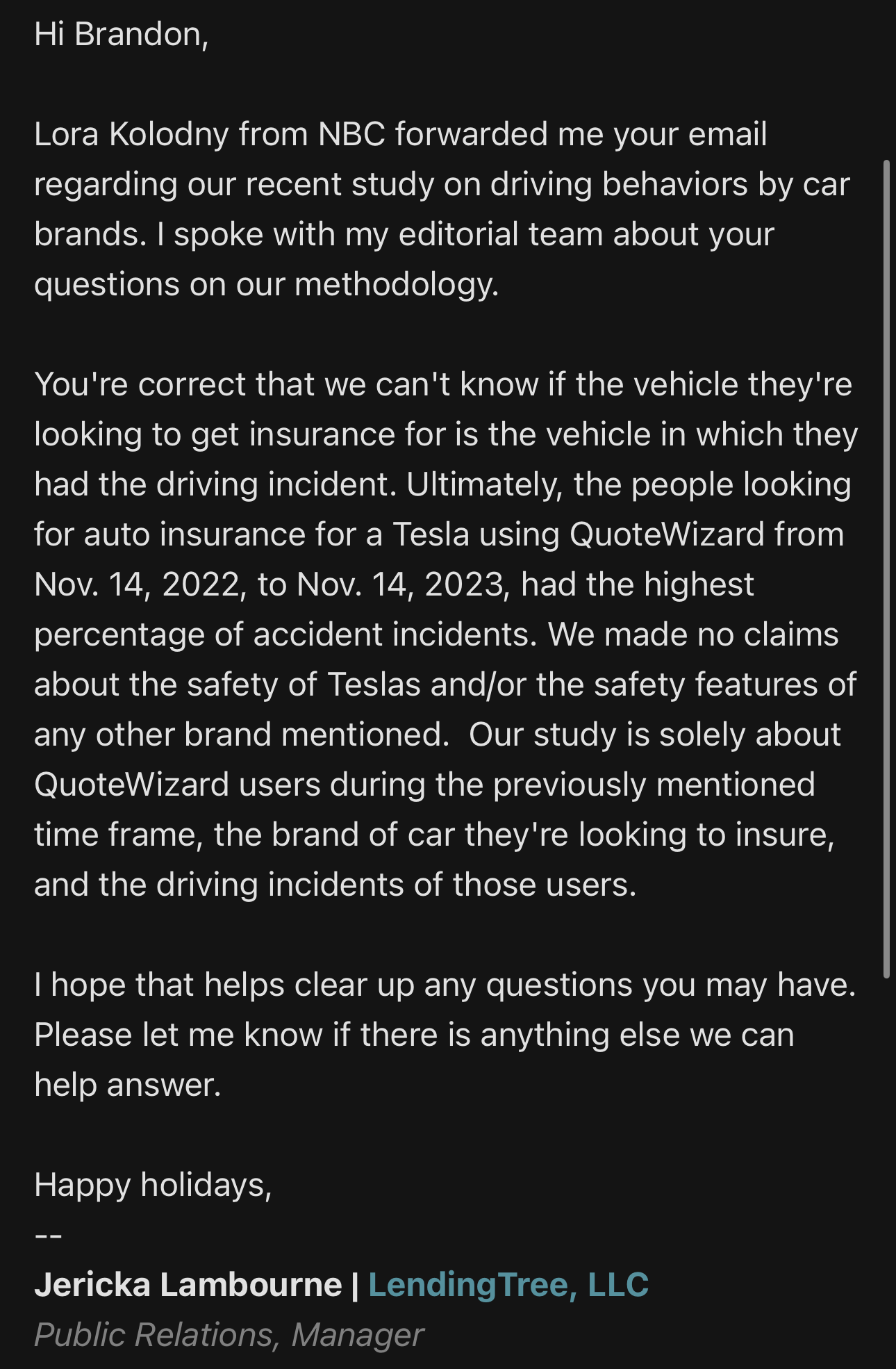

Update 12/21 4:27PM EST: Turns out I was correct. See confirmation from LendingTree at the end of the article. You can probably skip to that at this point, as the rest of this section is no longer relevant.

Is it possible they’re somehow looking at the driver’s actual accident history and what kind of vehicle it occurred in? Possibly, though I don’t really see how. I’ve gone through the QuoteWizard flow multiple times, including through the Progressive sign-up flow that it redirects me to after the initial steps. The latter asks for how many accidents I’ve been in and the dates where they occurred, but it does not ask about vehicle make or model.

Is it possible that they somehow pull data later in the process from insurance records or the DMV, and that this is shared back with LendingTree through some data-sharing agreement? Perhaps. But without any details explaining this, it seems like a stretch. Note that when I’ve gone through this flow recently, I have provided fake data in order to assess potential insurance rates for hypothetical individuals with varied histories. Are these quotes included in their data set? There’s no way to know based on what they’ve said so far.

The researchers analyzed quotes from people looking to insure their own vehicles, and did not include accident or incident data involving drivers of rental cars, a spokesperson for LendingTree told CNBC by email on Tuesday.

Hardly clarifying. Indeed, the “looking to insure their own vehicles” part only reinforces my initial interpretation.

Let’s make a big leap here and say for a moment that they are somehow looking at the accident histories of these visitors to their website and ascribing each accident to a particular vehicle make or model. Would this be a good way to assess the accident rates of drivers of those vehicles in the general population?

I think not. I mentioned before that most people get insurance quotes when they’re buying (or have just bought) a new vehicle. I expect the next most common scenario for requesting a quote is when the person’s insurance rate has gone up - perhaps due to an accident. Or a ticket (which the “study” also looks at). This is clearly not going to be a representative sample of the general public.

Even taken on its own terms, as a non-representative subsample, this data is unlikely to support meaningful comparisons between makes. The reason is that these numbers are very small. They claim that the accident rate they’ve associated with Tesla vehicles is 24 out of 1,000, and most of the other makes have numbers around 15-20 out of 1,000. Without raw data, we can’t determine a standard deviation or p-value for these differences. Given what we know, though, they may well be indistinguishable from random noise.
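How much a gap like 24 versus 18 per 1,000 means depends heavily on how many quotes sit behind each figure, which LendingTree has not disclosed. Here is a sketch using a pooled two-proportion z-test (normal approximation) with hypothetical sample sizes. The 18 per 1,000 comparison value is also an assumption, standing in for a typical brand from the 15-20 range. Note that this addresses only random error, not the selection bias described above.

```python
from math import erfc, sqrt


def two_proportion_p_value(x1: int, n1: int, x2: int, n2: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test (normal approximation).

    x1/n1 and x2/n2 are, e.g., drivers reporting an accident out of quotes
    requested for two different makes.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided standard normal tail: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    return erfc(abs(z) / sqrt(2))


# Hypothetical sample sizes; 18 per 1,000 stands in for a typical other brand.
print(two_proportion_p_value(24, 1_000, 18, 1_000))            # about 0.35
print(two_proportion_p_value(2_400, 100_000, 1_800, 100_000))  # far below 0.001
```

With 1,000 quotes per brand, the gap is well within chance. With 100,000 per brand it would be highly significant, yet it would still be just as skewed by who shops for insurance.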

If LendingTree believes there’s actually something valuable to glean from this data, I ask that they do at least one, and ideally both, of these things:

Document their methodology in detail

Release anonymized raw data so that researchers and analysts can validate their findings

If LendingTree clarifies what they’re doing and/or provides data to back up their claims, I’ll happily update this and amplify whatever insights are justified based on what they share (even if it supports their claim that Tesla drivers get in the most accidents, as shocked as I would be).

In the meantime, I would advise you to ignore everything claimed in their blog post, and the various news stories referencing it, entirely.

Update 12/21 4:27PM EST

Thanks to Lora Kolodny from CNBC for connecting me with a LendingTree spokesperson. They confirmed my suspicions:

I look forward to CNBC, Forbes, Electrek, and the others printing retractions 😉

Additional sources:

NHTSA FARS query page (unfortunately, they don’t provide permalinks for queries)

US sales figures: Tesla Model 3 | Tesla Model Y | Audi A4 series | Ford Fusion | Ford Mustang Mach-E | Subaru Forester

Methodology for Normalizing Safety Statistics of Partially Automated Vehicles (Goodall, 2021)

I don't think the biggest issue with the LendingTree data is random error, but instead systemic error.

A minor accident is unlikely to appear on the Carfax report of a 20-year-old Pontiac (Pontiac stopped making cars many years ago, so they are all old), because who reports a minor accident on a $1,000 car?

A minor accident on a newish $50k car is likely to be reported, as it is likely to go through insurance. Tesla has been growing faster than other car brands, so the vast majority of their cars are still under 5 years old, unlike basically every other brand.

The fact that the report consistently listed brands that no longer make cars as "safe" and new car brands as "unsafe" I think shows that there is likely little random error, but the systemic error dominates the data.

Thank you for this great post. Indeed, it is not Tesla drivers that had the highest rate of accidents; rather, it was the people who got a quote for Tesla car insurance on LendingTree. These people are looking to buy a Tesla or renew their existing Tesla insurance, and the accident data is their self-reported past driving history. For example, someone who had BMW, Ford, and Chevy cars in the past but now requests an insurance quote for a Tesla on LendingTree is categorized as a Tesla driver. If he had an accident while driving the BMW, for instance, that accident is counted under Tesla in this stupid study. You can’t make this up. What a terrible research design!